Hardware developers have missed out on the benefits of Docker and similar container-based solutions for one big reason: USB. In this post, we'll look at how devices are now starting to connect reliably from the host system to a container.

I have a career-long passion (borderline obsession) for developer tools. I want them to be great, and I'm always looking for ways to make them better for every developer. Every step in the program/test/debug loop requires access to devices via USB. For some time, Docker has supported USB device access via --device on Linux, but it left Windows and macOS users in the lurch. As of Docker Desktop 4.35.0, it is now possible to access USB devices on Windows and macOS as well! It's early days and caveats abound, but we can genuinely say Docker is finally starting to become a useful tool for hardware developers.

Why USB in Docker Matters

Working with USB devices inside containers adds a bit of complexity compared to native tools: containers add another variable to the process and are yet another tool to manage. But there's real value in using containers for development, namely reproducibility and environment isolation. It's also worth noting that USB device access isn't only relevant to hardware developers; anyone using a USB peripheral like a MIDI controller knows the struggle too.

On Linux, Docker can take advantage of native OS features to allow containerized processes to directly interact with USB devices. However, on Windows and macOS, Docker runs within a virtual machine (VM), meaning it doesn’t have direct access to the host’s hardware in the same way Linux does.

USB Limitations for macOS and Windows Users

For years, Windows and macOS users have faced a clunky workaround:

- Running Docker inside a Linux VM
- Passing USB devices through to that VM
- Passing them on into Docker containers

While this approach works, it is resource-intensive, slow, and far from seamless. Many developers have voiced frustration about this in GitHub issues, such as a long-running thread that discusses the challenges and asks for native USB passthrough support.

In practice, most hardware developers don't use Docker for local development at all because of the lack of USB access, relegating containers to CI/CD and test automation.

A Solution: USB/IP

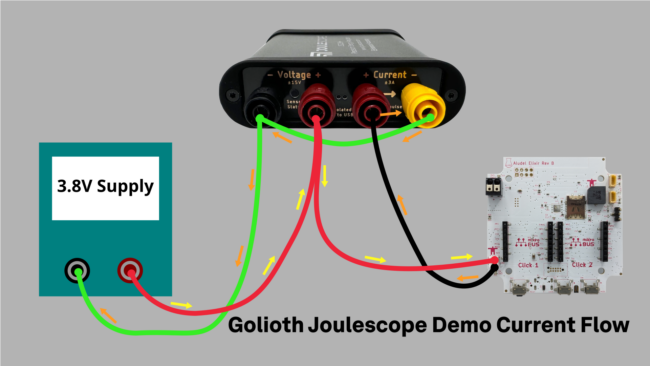

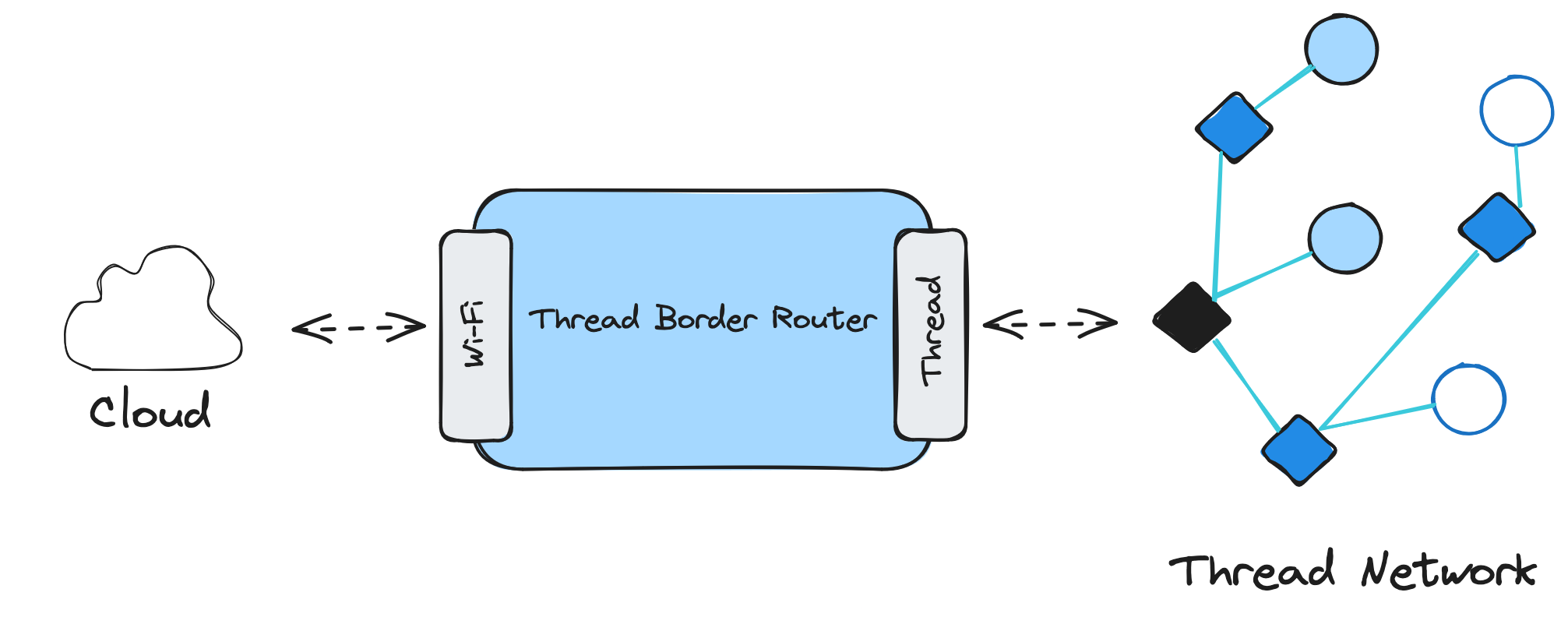

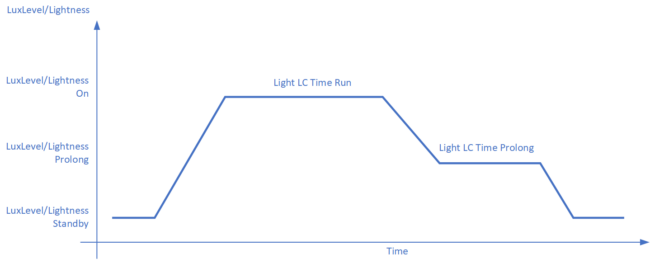

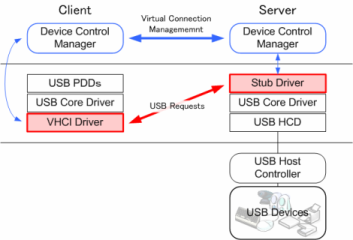

USB/IP is an open-source protocol, implemented in the Linux kernel, that allows USB devices to be shared over a network. A USB/IP server running on the host system can expose USB devices to clients (such as remote servers or containers) over the network. The client, running inside a Docker container, can then access and interact with the device as though it were directly connected to the container.

Via https://usbip.sourceforge.net/
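To make the "over the network" part concrete, here is a minimal Python sketch of the first exchange a USB/IP client makes: asking a server which devices it exports. It follows the message layout in the Linux kernel's USB/IP protocol documentation (big-endian fields, protocol version 0x0111, request code OP_REQ_DEVLIST = 0x8005, reply code OP_REP_DEVLIST = 0x0005, default TCP port 3240). The function names are hypothetical, and this is an illustration of the protocol, not a substitute for the usbip tools.

```python
import socket
import struct

USBIP_VERSION = 0x0111   # protocol version 1.1.1
OP_REQ_DEVLIST = 0x8005  # "list exported devices" request
OP_REP_DEVLIST = 0x0005  # matching reply
USBIP_PORT = 3240        # default USB/IP TCP port

# One exported-device record (big-endian): path[256], busid[32],
# busnum, devnum, speed (u32), idVendor, idProduct, bcdDevice (u16),
# then six u8 fields, the last of which is bNumInterfaces.
DEVICE_FMT = ">256s32sIIIHHHBBBBBB"
DEVICE_SIZE = struct.calcsize(DEVICE_FMT)  # 312 bytes
INTERFACE_SIZE = 4  # class, subclass, protocol, padding


def build_devlist_request() -> bytes:
    """Encode OP_REQ_DEVLIST: version, command code, status (always 0)."""
    return struct.pack(">HHI", USBIP_VERSION, OP_REQ_DEVLIST, 0)


def check_reply_header(data: bytes) -> int:
    """Validate an OP_REP_DEVLIST header and return the device count."""
    _version, code, status = struct.unpack_from(">HHI", data, 0)
    if code != OP_REP_DEVLIST or status != 0:
        raise ValueError(f"unexpected reply: code={code:#06x} status={status}")
    (count,) = struct.unpack_from(">I", data, 8)
    return count


def parse_devlist_reply(data: bytes) -> list:
    """Decode a complete OP_REP_DEVLIST message into device summaries."""
    count = check_reply_header(data)
    offset = 12
    devices = []
    for _ in range(count):
        fields = struct.unpack_from(DEVICE_FMT, data, offset)
        busid = fields[1].split(b"\0", 1)[0].decode()
        devices.append({"busid": busid, "vid": fields[5], "pid": fields[6]})
        # Skip the fixed record plus one 4-byte entry per interface.
        offset += DEVICE_SIZE + fields[13] * INTERFACE_SIZE
    return devices


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes or fail if the server hangs up early."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("server closed the connection early")
        buf += chunk
    return buf


def list_exported(host: str, port: int = USBIP_PORT) -> list:
    """Ask a USB/IP server which devices it exports."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(build_devlist_request())
        data = recv_exact(sock, 12)
        for _ in range(check_reply_header(data)):
            record = recv_exact(sock, DEVICE_SIZE)
            # The last byte of each record is bNumInterfaces.
            data += record + recv_exact(sock, record[-1] * INTERFACE_SIZE)
    return parse_devlist_reply(data)
```

From inside a container, list_exported("host.docker.internal") would be expected to report the same BUSIDs as usbip list -r host.docker.internal, assuming the host's server follows the documented protocol.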

In theory, enabling USB/IP in Docker would allow containers to access USB devices directly, bypassing the need for complex VM setups. This would greatly simplify the experience, offering access to USB devices without the overhead of managing an extra VM by hand.

Making It Happen: Collaborating with Docker

While USB/IP looked like the perfect solution to Docker's USB problem, Docker Desktop hadn't yet implemented USB/IP support as an official feature.

In February of this year, I reached out to the Docker team to see if it would be possible to integrate USB/IP into Docker Desktop. I got connected to Piotr Stankiewicz, a senior software engineer at Docker, to discuss the possibility of adding support for USB/IP. Piotr was immediately interested, and after a few discussions, we began collaborating on requirements.

Finally, after several months of work by Piotr and testing by me, Docker Desktop 4.35.0 was released, featuring support for USB/IP.

Key Concepts Before We Begin

Before diving into the steps for enabling USB device access, it’s important to understand the basic configuration. The key idea is that USB devices are passed through from the host system into a shared context within Docker. This means that every container on your machine could theoretically access the USB device. While this is great for development, be cautious on untrusted machines, as it could expose your USB devices to potentially malicious containers.

Setting Up a Persistent Device Manager Container

One of the best ways to manage this is to set up a lightweight “device manager” container, which I’ll refer to as devmgr. This container acts as a persistent context for accessing USB devices, so you don’t have to repeat the setup every time you want to develop code in a new container. As long as devmgr keeps running, the USB device remains available to other containers as needed.

I’ve created a simple image (source) and pushed it to Docker Hub as jonathanberi/devmgr for convenience, though you can use your own image as a device manager.

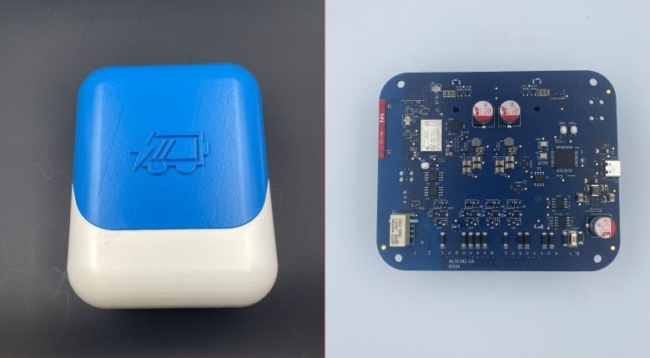

Serving up USB

Next, we need to figure out how to expose USB devices from the host machine, aka “the server” in USB/IP terms. Windows already has a mature USB/IP server you may not have known about: it’s called usbipd-win, and it’s what enables USB access on WSL (which I’ve written about previously). Unfortunately, macOS doesn’t have a complete server, but I was able to use the pyusbip Python library to program a development board (I also tried a Rust library, but it’s missing support for USB-to-serial devices). Developing a USB/IP server for macOS is certainly one area where the community can contribute!

With the pre-reqs out of the way, we can get going.

Getting Started with Windows

1. Install prerequisites

Make sure you have installed Docker Desktop 4.35.0 or later, usbipd-win, and any device drivers your USB devices might need.

2. Select a USB device to share

Open an administrator PowerShell prompt and list the available USB devices. Note the BUSID, as we’ll use it to identify the device next.

usbipd list

3. Share a USB device via USB/IP

Binding a device to the USB/IP server makes it available to Docker and other clients. You can confirm the device is shared by running usbipd list again; its state should change from Not shared to Shared.

usbipd bind -b <BUSID>

Here’s an example of the output from my machine:

PS C:\Users\jberi> usbipd list

Connected:

BUSID  VID:PID    DEVICE                                                       STATE

2-1    10c4:ee60  CP2102 USB to UART Bridge Controller                         Not shared

2-3    06cb:00bd  Synpatics UWP WBDI                                           Not shared

2-4    13d3:5406  Integrated Camera, Integrated IR Camera, Camera DFU Device   Not shared

2-10   8087:0032  Intel(R) Wireless Bluetooth(R)                               Not shared

Persisted:

GUID  DEVICE

PS C:\Users\jberi> usbipd bind -b 2-1

PS C:\Users\jberi> usbipd list

Connected:

BUSID  VID:PID    DEVICE                                                       STATE

2-1    10c4:ee60  CP2102 USB to UART Bridge Controller                         Shared

2-3    06cb:00bd  Synpatics UWP WBDI                                           Not shared

2-4    13d3:5406  Integrated Camera, Integrated IR Camera, Camera DFU Device   Not shared

2-10   8087:0032  Intel(R) Wireless Bluetooth(R)                               Not shared

Persisted:

GUID  DEVICE
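If you bind the same board often, looking up its BUSID by VID:PID saves reading the table each time. The sketch below is a hypothetical helper, not part of usbipd-win; it assumes the row layout shown above (BUSID, VID:PID, DEVICE, STATE, with at least two spaces before the STATE column), so treat the regular expression as an assumption to adjust if your usbipd version formats its output differently.

```python
import re
import subprocess

# Assumed layout of a `usbipd list` row: BUSID, VID:PID, DEVICE, STATE, with
# at least two spaces between the DEVICE and STATE columns.
ROW = re.compile(
    r"^\s*(?P<busid>\d+-[\d.]+)\s+"
    r"(?P<vidpid>[0-9a-fA-F]{4}:[0-9a-fA-F]{4})\s+"
    r"(?P<device>.+?)\s{2,}(?P<state>\S.*?)\s*$"
)


def parse_usbipd_list(text: str) -> list:
    """Return one dict per device row; header lines do not match."""
    rows = []
    for line in text.splitlines():
        match = ROW.match(line)
        if match:
            rows.append(match.groupdict())
    return rows


def busid_for(text: str, vidpid: str):
    """BUSID of the first device whose VID:PID matches (case-insensitive)."""
    for row in parse_usbipd_list(text):
        if row["vidpid"].lower() == vidpid.lower():
            return row["busid"]
    return None


def bind_by_vidpid(vidpid: str) -> str:
    """Look up a device by VID:PID and bind it; needs an administrator shell."""
    listing = subprocess.run(
        ["usbipd", "list"], capture_output=True, text=True, check=True
    ).stdout
    busid = busid_for(listing, vidpid)
    if busid is None:
        raise LookupError(f"no connected device with VID:PID {vidpid}")
    subprocess.run(["usbipd", "bind", "-b", busid], check=True)
    return busid
```

For the CP2102 above, bind_by_vidpid("10c4:ee60") would run the equivalent of usbipd bind -b 2-1.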

4. Create a container to centrally manage devices

Start a devmgr container with the appropriate flags (--privileged and --pid=host are key).

docker run --rm -it --privileged --pid=host jonathanberi/devmgr
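The command above gives you an interactive shell. If you'd rather script the remaining steps, one option is to start devmgr detached and send it commands with docker exec. This is a sketch under assumptions: the container name devmgr is hypothetical, and the keep-alive command tail -f /dev/null assumes the image ships tail and replaces whatever default command the image defines.

```python
import subprocess

DEVMGR_IMAGE = "jonathanberi/devmgr"
DEVMGR_NAME = "devmgr"  # hypothetical container name
USBIP_HOST = "host.docker.internal"


def devmgr_run_argv(name: str = DEVMGR_NAME) -> list:
    """Start devmgr detached instead of interactively (-it)."""
    return [
        "docker", "run", "-d", "--rm", "--name", name,
        # The two flags called out above as key.
        "--privileged", "--pid=host",
        DEVMGR_IMAGE,
        # Assumed keep-alive command; overrides the image's default command.
        "tail", "-f", "/dev/null",
    ]


def devmgr_exec_argv(*command: str, name: str = DEVMGR_NAME) -> list:
    """Run a one-off command (for example usbip) inside the running devmgr."""
    return ["docker", "exec", name, *command]


def run(argv: list) -> str:
    """Execute a command and return its stdout, raising on failure."""
    return subprocess.run(argv, capture_output=True, text=True, check=True).stdout
```

For example, run(devmgr_run_argv()) once, then run(devmgr_exec_argv("usbip", "list", "-r", USBIP_HOST)) performs the next step from a script.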

5. See which devices are available to Docker

In the devmgr container, we can list all the devices that the USB/IP client can grab (USB/IP calls this attaching). One important note: we need to use host.docker.internal as the remote address to make the Docker magic happen.

usbip list -r host.docker.internal
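The output of this command (an example appears further below) lists each exportable device as a BUSID followed by vendor and product names and the VID:PID in parentheses. The "failed to open .../usb.ids" error only means the container lacks the USB ID database used to turn IDs into names, which is why they show as "unknown". The hypothetical helper below pulls out (BUSID, VID, PID) tuples so a script can pick the right device; the line format it matches is inferred from that sample output.

```python
import re

# Device rows look like "2-1: <vendor> : <product> (239a:0029)"; the ": ..."
# continuation lines and the header/error lines do not match.
EXPORT_ROW = re.compile(
    r"^\s*(?P<busid>\d+-[\d.]+):\s.*"
    r"\((?P<vid>[0-9a-fA-F]{4}):(?P<pid>[0-9a-fA-F]{4})\)\s*$"
)


def exportable_devices(text: str) -> list:
    """Return (busid, vid, pid) tuples from `usbip list -r` output."""
    devices = []
    for line in text.splitlines():
        match = EXPORT_ROW.match(line)
        if match:
            devices.append(
                (match["busid"], match["vid"].lower(), match["pid"].lower())
            )
    return devices


def busids_matching(text: str, vid: str, pid: str) -> list:
    """All exported BUSIDs with the given vendor and product ID."""
    return [
        busid
        for busid, dev_vid, dev_pid in exportable_devices(text)
        if (dev_vid, dev_pid) == (vid.lower(), pid.lower())
    ]
```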

6. Attach a device to Docker

Now we want to attach the device shared from the host. Make sure to use the <BUSID> from the previous step.

usbip attach -r host.docker.internal -b <BUSID>
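The attach command returns before you necessarily know which /dev node the device ended up as. A script running inside devmgr can snapshot the serial nodes, attach, and poll until a new one appears. This is a sketch with assumptions: the glob patterns cover CDC-ACM boards (ttyACM*, like the board in the sample output) and USB-to-serial bridges such as the CP2102 (typically ttyUSB*), and the timeout and polling interval are arbitrary.

```python
import glob
import subprocess
import time

# Assumed device-node patterns: CDC-ACM boards appear as ttyACM*, and
# USB-to-serial bridges (such as the CP2102) usually as ttyUSB*.
TTY_PATTERNS = ("/dev/ttyACM*", "/dev/ttyUSB*")


def list_ttys() -> set:
    """Current serial device nodes matching TTY_PATTERNS."""
    return {path for pattern in TTY_PATTERNS for path in glob.glob(pattern)}


def wait_for_new_tty(before: set, lister=list_ttys, timeout: float = 10.0,
                     interval: float = 0.25) -> list:
    """Poll until a serial node not present in `before` appears."""
    deadline = time.monotonic() + timeout
    while True:
        new_nodes = sorted(lister() - before)
        if new_nodes:
            return new_nodes
        if time.monotonic() >= deadline:
            raise TimeoutError("no new serial device node appeared")
        time.sleep(interval)


def attach_and_wait(busid: str, host: str = "host.docker.internal") -> list:
    """Attach a remote device, then return the serial node(s) it created."""
    before = list_ttys()
    subprocess.run(["usbip", "attach", "-r", host, "-b", busid], check=True)
    return wait_for_new_tty(before)
```

The lister parameter exists so the polling logic can be exercised without real hardware.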

7. Verify that the device was attached

The usbip port command confirms the operation, but since you now have a real-but-virtual device, ls /dev/tty* or lsusb should also work. You’ll need the /dev name for the next step anyway.

usbip port

Here’s another example of the output from my machine:

b6b86f127561:/# usbip list -r host.docker.internal

usbip: error: failed to open /usr/share/hwdata//usb.ids

Exportable USB devices

======================

- host.docker.internal

2-1: unknown vendor : unknown prodcut (239a:0029)

: USB\VID_239A&PID_0029\4445FEEF71F2274A

: unknown class / unknown subclass / unknown protocol (ef/02/01)

: 0 - unknown class / unknown subclass / unknown protocol (02/02/00)

: 1 - unknown class / unknown subclass / unknown protocol (0a/00/00)

: 2 - unknown class / unknown subclass / unknown protocol (08/06/50)

b6b86f127561:/# usbip attach -r host.docker.internal -b 2-1

b6b86f127561:/# usbip port

usbip: error: failed to open /usr/share/hwdata//usb.ids

Imported USB devices

======================

Port 00: <Port in Use> at Full Speed(12Mbps)

unknown vendor : unknown prodcut (239a:0029)

1-1 -> usbip://host.docker.internal:3240/2-1

-> remote bus/dev 002/001

b6b86f127561:/# lsusb

Bus 001 Device 001: ID 1d6b:0002

Bus 001 Device 009: ID 239a:0029

Bus 002 Device 001: ID 1d6b:0003

b6b86f127561:/# ls /dev/ttyA*

/dev/ttyACM0

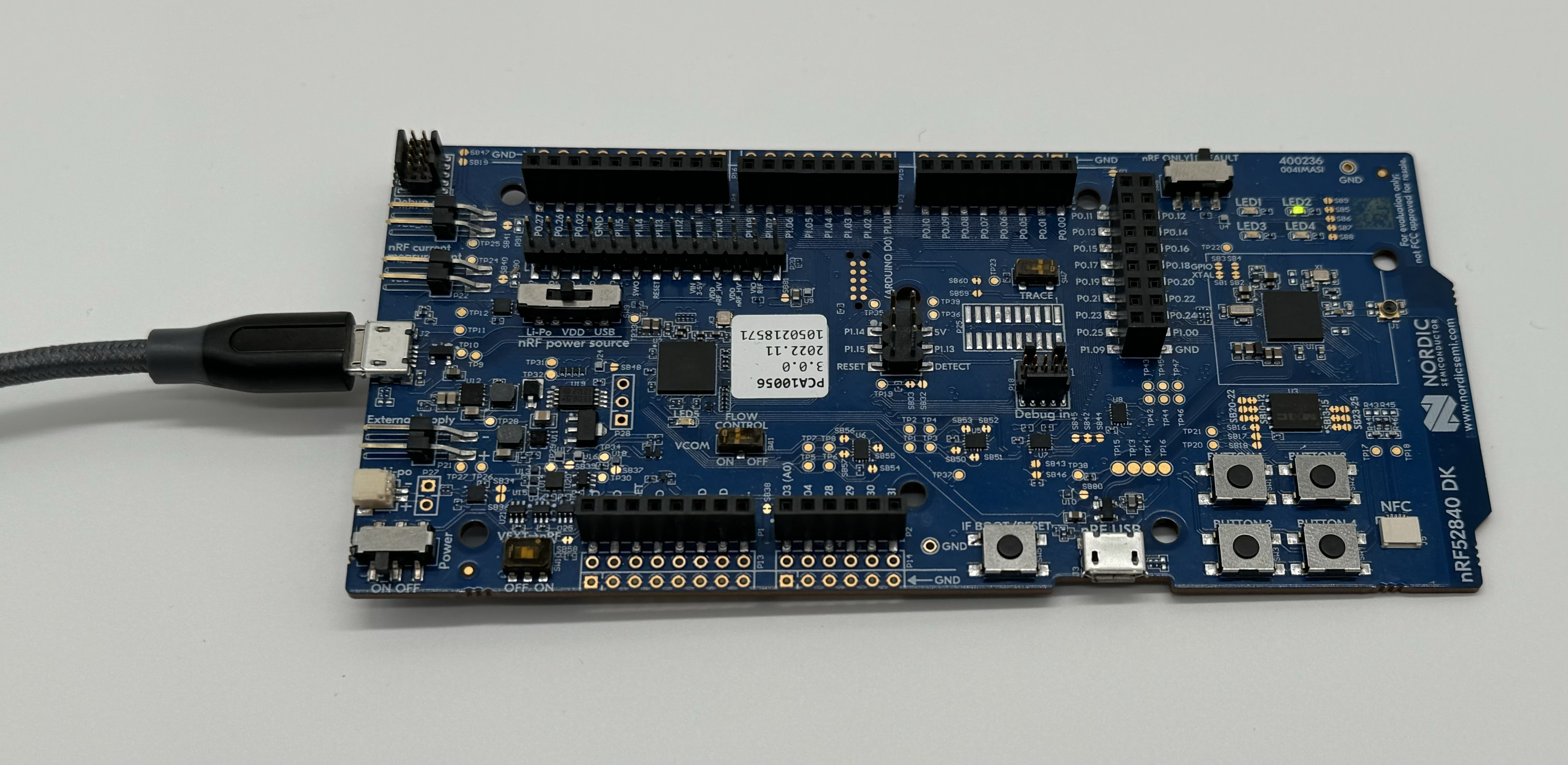
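Note that usbip port identifies each imported device by a local port number (Port 00 above) and shows which remote BUSID it maps to. That port number is what usbip detach -p expects when you want to release a device. The hypothetical helper below maps ports to remote devices; its patterns are inferred from the sample output above, so treat them as assumptions.

```python
import re

PORT_LINE = re.compile(r"^\s*Port\s+(?P<port>\d+):")
REMOTE_LINE = re.compile(
    r"->\s*usbip://(?P<host>[^:/\s]+):(?P<tcp>\d+)/(?P<busid>\S+)"
)


def imported_devices(text: str) -> list:
    """Map each local port to the remote host and BUSID it imports."""
    devices = []
    port = None
    for line in text.splitlines():
        port_match = PORT_LINE.match(line)
        if port_match:
            port = int(port_match["port"])
            continue
        remote_match = REMOTE_LINE.search(line)
        if remote_match and port is not None:
            devices.append({
                "port": port,
                "host": remote_match["host"],
                "busid": remote_match["busid"],
            })
            port = None  # one remote mapping per port block
    return devices


def detach_argv(port: int) -> list:
    """Command to release an imported device, e.g. before unplugging it."""
    return ["usbip", "detach", "-p", str(port)]
```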

8. Use your newly-attached USB device

The moment of truth! We can finally use our USB device in a development container. From Docker’s perspective it is a real USB device, so you can use it to flash, debug, and twiddle bits. You can, of course, also use it for non-development things like hooking up a MIDI* controller, but hardware is more fun, right?

There are endless possible configurations, so I’ll give just one example: flashing a Zephyr application using an image from github.com/embeddedcontainers. Note that we pass the /dev node with --device, just as we would on a Linux host.

docker run --rm -it -v ${PWD}:/workdir --device "/dev/ttyACM0" ghcr.io/embeddedcontainers/zephyr:arm-0.17.0SDK

Watching west flash work for the first time felt like ✨magic✨.

* One caveat with USB/IP is that Docker may need to enable device drivers for your particular hardware. Many popular USB-to-serial chips are already enabled. File an issue on github.com/docker/for-win to request additional drivers or to report any other related problems.

Getting Started with macOS

The steps for macOS are nearly identical to those for Windows, except that we need a different USB/IP server. I’ll use one that I know works with a CP2102. It’s still flaky and only partially implemented, so we need the community to pitch in!

git clone https://github.com/tumayt/pyusbip

cd pyusbip

python3 -m venv .venv

source .venv/bin/activate

pip install libusb1

python pyusbip

Watch as we flash and monitor an ESP32 running Zephyr in Docker!

As before, Docker may need to enable device drivers for your hardware, so file any issues at github.com/docker/for-mac.

Limitations to the USB/IP approach

Obviously, the most glaring issue here is the UX. The manual setup is clunky, and each time a device reboots it may need to be re-attached (though auto-attach works pretty well in usbipd-win!). We also need a proper USB/IP server implementation for macOS. Lastly, device support may be limited by driver availability.

However, I’m optimistic that these challenges can be overcome and that we’ll see solutions before long (open source, ftw 💪).
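Until auto-attach exists on the Docker side, one stopgap for the re-attach problem is a small watchdog running inside devmgr that checks usbip port and re-runs usbip attach when the device drops off. This is a sketch under assumptions: the device must still be bound on the host, it is assumed to come back under the same BUSID, and the two-second polling interval is arbitrary.

```python
import re
import subprocess
import time


def is_attached(port_output: str, busid: str,
                host: str = "host.docker.internal") -> bool:
    """True if `usbip port` output lists host/busid as an imported device."""
    pattern = rf"usbip://{re.escape(host)}:\d+/{re.escape(busid)}(?:\s|$)"
    return re.search(pattern, port_output) is not None


def watch(busid: str, host: str = "host.docker.internal",
          interval: float = 2.0) -> None:
    """Re-run `usbip attach` whenever the device disappears (runs forever)."""
    while True:
        ports = subprocess.run(
            ["usbip", "port"], capture_output=True, text=True
        ).stdout
        if not is_attached(ports, busid, host):
            # Attaching can fail while the device is still re-enumerating on
            # the host; without check=True we simply retry on the next pass.
            subprocess.run(["usbip", "attach", "-r", host, "-b", busid])
        time.sleep(interval)
```

The trailing whitespace check in the pattern keeps BUSID 2-1 from matching a device imported as 2-10.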

Conclusion

The ability to access USB devices within Docker on both Windows and macOS is a big leap forward for developers, hardware enthusiasts, and anyone working with USB peripherals. While there are still some setup hurdles and device limitations to overcome, this feature has the potential to streamline development and testing processes.

Give it a try and see how it works for you. Just keep in mind that it’s still early days for this feature, so you may run into some bumps along the way. Share your feedback on our forum, and let’s help improve the developer experience of hardware developers everywhere!