I built the Internet of Memes, making it possible for anyone at my company to push funny pictures to the ePaper displays on all of our coworkers’ desks.

I work at Golioth, but I don’t really work at Golioth. We’re headquartered in San Francisco but we’re also a completely remote company spread across the US, Brazil, and Poland. I love working with insanely talented and interesting people, but we don’t get to see each other face to face in the office each day. So, at the risk of “all work and no play”, I took on an after-hours project to make sharing memes with each other a bit more IRL.

ePaper is Standard-Issue at this Company

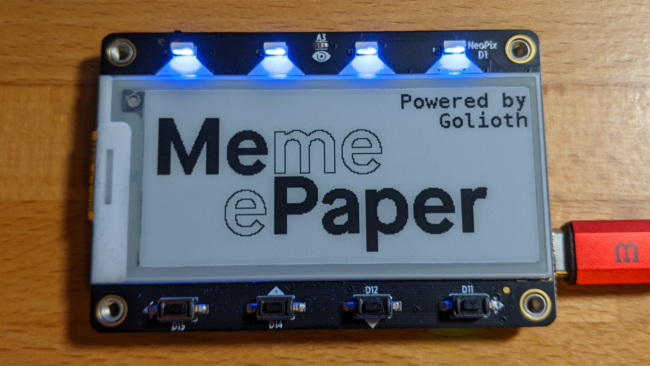

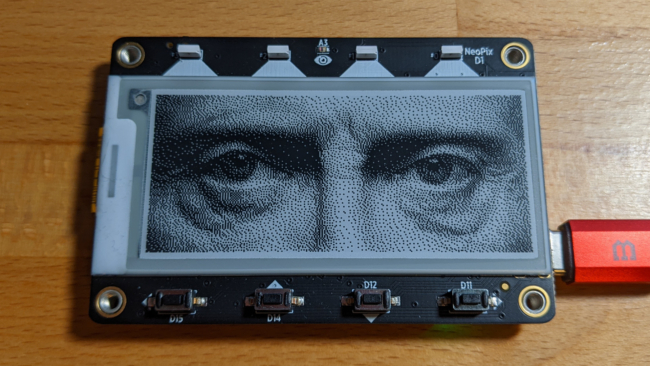

As part of onboarding, each new employee is shipped a small development board that includes an ePaper display, RGB LEDs, buttons, a speaker, an accelerometer, and WiFi. It’s Adafruit’s MagTag and we use it for developer training, so it makes sense to hand one out to everyone when they join up. But the boards sit unused the vast majority of the time. Why not use that messaging real estate for something fun?

The project comes in two parts: firmware for the ESP32-S2 that drives the MagTag and a Slack Bot that handles the image publishing. Let’s jump into the device-side first.

Espressif’s ESP-IDF, Golioth’s Device Management, and Google Drive

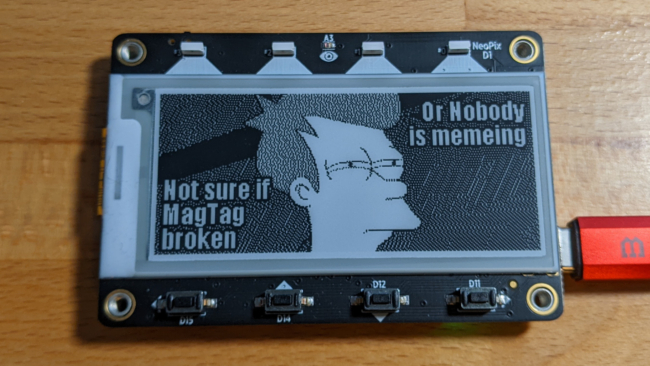

The device firmware got a bit of a head start because we have a MagTag-based demo that’s part of the Golioth Firmware SDK. The demo can already drive the hardware and use all Golioth features.

RAM considerations

The images for the display are 296×128 black and white at 1 bit per pixel, so you need 296 × 128 / 8 = 4,736 bytes (about 4.7 kB) of RAM to download an image. This was a challenge for the on-chip SRAM, but the ESP32-S2 WROVER module on these boards includes a 2 MB SPI PSRAM chip built into the module. I enabled this in Kconfig and used malloc() to place a framebuffer for the display in external RAM.

    #define FRAME_BUFFER_SIZE 5000

    /* Get memory space for frame buffer */
    fb = (uint8_t *) malloc(FRAME_BUFFER_SIZE);

The buffer is slightly oversized because it’s used to store an HTTP response that includes a bit of header data.
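The sizing above can be checked with a little arithmetic. The following is a minimal host-side sketch, not the firmware’s code: the helper names `image_bytes_1bpp` and `buffer_headroom` and the `DISPLAY_*` macros are invented for illustration, and only `FRAME_BUFFER_SIZE` comes from the listing above.

```c
#include <assert.h>
#include <stddef.h>

/* Panel geometry from the article; the macro names are illustrative. */
#define DISPLAY_WIDTH_PX  296
#define DISPLAY_HEIGHT_PX 128
#define FRAME_BUFFER_SIZE 5000 /* same value as the firmware listing */

/* Bytes needed for a 1-bit-per-pixel image. Each row is rounded up to a
 * whole byte, which is also how PBM pads its rows. */
static size_t image_bytes_1bpp(size_t width_px, size_t height_px)
{
    size_t bytes_per_row = (width_px + 7) / 8;
    return bytes_per_row * height_px;
}

/* Spare bytes left in a buffer once the pixel data is accounted for. */
static size_t buffer_headroom(size_t buffer_size, size_t width_px, size_t height_px)
{
    size_t needed = image_bytes_1bpp(width_px, height_px);
    assert(buffer_size >= needed); /* caller must size the buffer first */
    return buffer_size - needed;
}
```

For the MagTag, `image_bytes_1bpp(DISPLAY_WIDTH_PX, DISPLAY_HEIGHT_PX)` gives 4,736 bytes, so a 5,000-byte buffer leaves 264 bytes for anything that precedes the pixel data.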

Leverage the ESP-IDF http_client

The next challenge was how to get the images onto the device. It is possible to repurpose Golioth’s OTA system to supply these images, but for reasons I’ll get to in the next section that’s an inefficient approach. What I really want is a way to upload images to one single location and have many devices access them. That’s how webpages work, right?

I adapted the https_with_url() example from the ESP-IDF esp_http_client_example code. Just supply a URL to the image and the http(s) client will grab the page and dump it into the frame buffer.

    void fetch_page(void) {
        ESP_LOGI(TAG, "MEME FILE");
        const char* url_string_p = mepaper_provisional_url;

        esp_http_client_config_t config = {
            .url = url_string_p,
            .event_handler = _http_event_handler,
            .crt_bundle_attach = esp_crt_bundle_attach,
            .user_data = (char*)fb,
            .timeout_ms = 5000,
        };
        esp_http_client_handle_t client = esp_http_client_init(&config);
        esp_err_t err = esp_http_client_perform(client);

        if (err == ESP_OK) {
            ESP_LOGI(TAG, "HTTPS Status = %d, content_length = %d",
                     esp_http_client_get_status_code(client),
                     esp_http_client_get_content_length(client));
            validate_and_display();
        } else {
            ESP_LOGE(TAG, "Error perform http request %s", esp_err_to_name(err));
            xEventGroupSetBits(_event_group, EVENT_DOWNLOAD_COMPLETE);
        }
        esp_http_client_cleanup(client);
    }

A question of image formats

What kind of image should this be, anyway? My friend Larry Bank has written a bunch of image decoders for embedded systems which I considered using. But I ended up going with an easier solution. A format I had never heard of before but that’s perfectly suited for a 1-bit-per-pixel display like this one is called PBM or “Portable Bit Map”.

The PBM specification is for a 1-bit color depth image. The byte ordering and endianness are exactly what this ePaper display needs, and the header is super simple to parse. When the file is downloaded to the PSRAM, the firmware searches for a header. Once found, the data after that header is piped directly to the display. Voilà!

    static const uint8_t HEADER_PATTERN[] = { 0x31, 0x32, 0x38, 0x20, 0x32, 0x39, 0x36, 0x0A };
    static const uint8_t WHITESPACE[] = { 0x0A, 0x0B, 0x0D, 0x20 };

    static bool is_whitespace(uint8_t test_char) {
        for (uint8_t i=0; i<WHITESPACE_SIZE; i++) {
            if (test_char == WHITESPACE[i]) {
                return true;
            }
        }
        return false;
    }

    static bool headers_match(uint8_t *slice) {
        // Spec is complicated by "whitespace" instead of a specific character
        for (uint8_t i=0; i<HEADER_LEN; i++) {
            if (i == WHITESPACE_IDX) {
                if (is_whitespace(slice[i]) == false) {
                    return false; //this char must be whitespace
                }
            }
            else if (HEADER_PATTERN[i] != slice[i]) {
                return false; //members don't match
            }
        }
        return true;
    }
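To show how a matcher like this might be used, here is a self-contained sketch that scans a downloaded buffer and returns the offset of the first pixel byte. It is not the firmware’s implementation: `find_pixel_offset` and `is_pbm_space` are hypothetical names, and the sketch assumes that both separators (the one between the dimensions and the one after the height) may be a blank, TAB, CR, or LF.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Whitespace set assumed for this sketch: blank, TAB, LF, CR. */
static bool is_pbm_space(uint8_t c)
{
    return c == ' ' || c == '\t' || c == '\n' || c == '\r';
}

/* Scan buf for "128<ws>296<ws>" and return the offset of the byte that
 * follows it (the first byte of pixel data), or -1 if it is not found. */
static long find_pixel_offset(const uint8_t *buf, size_t len)
{
    const size_t header_len = 8; /* 3 digits + ws + 3 digits + ws */

    assert(buf != NULL);

    for (size_t i = 0; i + header_len <= len; i++) {
        if (memcmp(buf + i, "128", 3) == 0 &&
            is_pbm_space(buf[i + 3]) &&
            memcmp(buf + i + 4, "296", 3) == 0 &&
            is_pbm_space(buf[i + 7])) {
            return (long)(i + header_len);
        }
    }
    return -1; /* no matching dimensions header in the buffer */
}
```

Because the scan starts at the beginning of the buffer, the header is found before any pixel bytes that happen to resemble it. A caller would still want to confirm that enough bytes follow the returned offset before handing them to the display.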

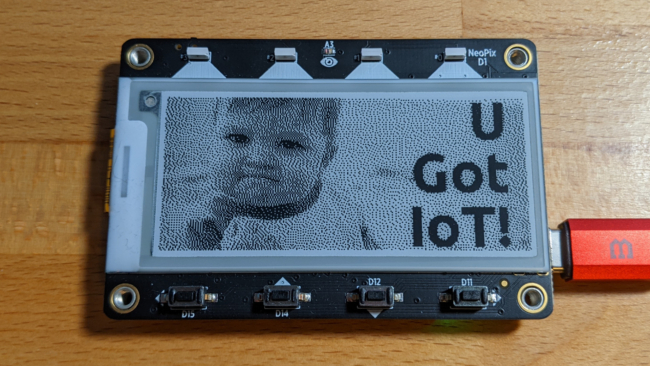

Images are created in your favorite editing software (I use GIMP). Set the mode to 1-bit depth, size the image to 296×128, rotate it counterclockwise by 90 degrees, and export it as PBM.
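For a sense of what the exported file contains, the sketch below builds a raw (“P4”) PBM image in memory: a short text header followed by rows packed eight pixels per byte, most significant bit first, with 1 meaning black. The function name `pbm_encode` and its one-byte-per-pixel input layout are assumptions made for this example, and it assumes the raw rather than the ASCII variant of the format.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Encode a one-byte-per-pixel image (nonzero = black) as raw PBM.
 * Returns the number of bytes written to out, or 0 if out is too small. */
static size_t pbm_encode(uint8_t *out, size_t cap, const uint8_t *pixels,
                         unsigned width, unsigned height)
{
    assert(out != NULL && pixels != NULL);

    /* Text header: magic number, width, height, then one newline. */
    char header[32];
    int header_len = snprintf(header, sizeof header, "P4\n%u %u\n", width, height);
    if (header_len < 0 || (size_t)header_len >= sizeof header) {
        return 0;
    }

    size_t row_bytes = ((size_t)width + 7) / 8; /* rows pad to a whole byte */
    size_t total = (size_t)header_len + row_bytes * height;
    if (total > cap) {
        return 0;
    }

    memcpy(out, header, (size_t)header_len);
    uint8_t *raster = out + header_len;
    memset(raster, 0, row_bytes * height); /* start with all-white pixels */

    for (unsigned y = 0; y < height; y++) {
        for (unsigned x = 0; x < width; x++) {
            if (pixels[(size_t)y * width + x]) {
                /* Leftmost pixel of each byte lives in the top bit. */
                raster[(size_t)y * row_bytes + x / 8] |= (uint8_t)(0x80u >> (x % 8u));
            }
        }
    }
    return total;
}
```

A blank 128×296 image encodes to 11 header bytes plus 4,736 raster bytes, 4,747 in total, which fits in the 5,000-byte frame buffer.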

A Slack Bot Made of Python

With the device side proved out, I turned my mind to the “distribution” problem. How do I let different users control the fleet? Here are the considerations I had in mind:

- How can users register their own devices for this service?

- How can users push their own images to the entire fleet?

- How will firmware upgrades be managed?

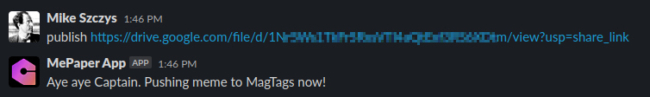

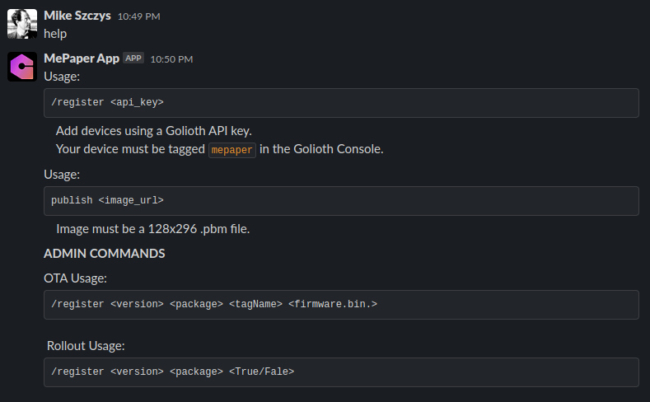

As a fully-remote company, we’re on Slack all day long. A Slackbot (a daemon that sits in the chat listening for commands) makes the most sense for granting some fleet control to everyone in the company.

How should the devices be provisioned so that they are all accessible by the bot? This topic could itself be a whole post. I decided the best way was to let each person add their MagTag to a Golioth project they control, assigning it the mepaper tag (“meme” + “epaper” = mepaper). They then share an API key for that project with the bot, so their device is notified when a new image is available for the screen. OTA firmware updates can also be staged for registered devices.

Starting from a stock MagTag, you download the latest firmware release and flash it to the board using esptool.py (the programming tool from Espressif). When the board boots, a serial shell is available via USB where the user can assign WiFi credentials and Golioth device credentials. That’s it for setup; the bot takes care of the rest.

Each device observes a mepaper endpoint on Golioth LightDB State. The value is the URL of the image to display; whenever it changes, the boards are notified and use the HTTPS client to download the new image. The bot listens for a publish <url_of_image> message on the Slack channel, then checks that the file exists and is in the proper format. The bot then iterates through each API key it has stored, updating the LightDB State endpoint for each board tagged as mepaper.

I chose this approach instead of pushing an OTA artifact for each image. With OTA, the image would need to be uploaded multiple times (once for each registered project API key) and a copy of all past memes would remain as artifacts. By uploading the images to our Google Drive, they are only stored one time, and all devices can access them as long as they have a copy of the share link.

Of course, as a quick and dirty hack, the original version of the firmware wasn’t the greatest. I made sure to use the OTA firmware upgrade built into our sample code. As with the image updates, users who the bot recognizes as admins can issue /register ota and /register rollout commands. These accept parameters like version, package name, and the filename of the new firmware. For security, the bot will only upload firmware that is located in the same directory as the bot itself.

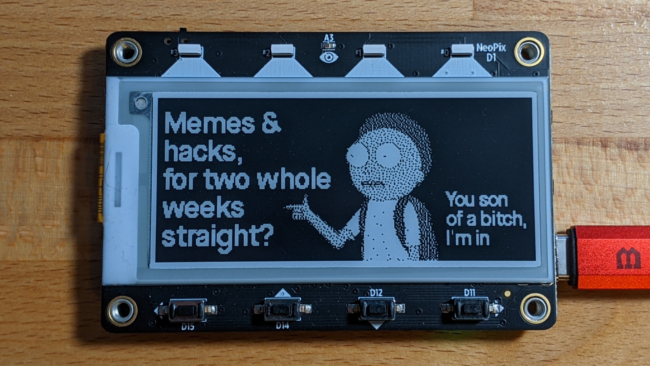

Oh the Fun of a Side-Channel for Memes!

Having a dedicated, non-intrusive screen for memes turns out to be an excellent boost to the remote workplace vibe. Your computer doesn’t need to be unlocked, and you don’t need to switch context to see the newest message. The reason for pushing a meme doesn’t have to be meaningful; it is purely a mechanism for spreading joy. And it worked!

We’ve previously written about the Demo Culture at Golioth. The “Demo early, demo often” mantra is an effort to break out of a rut where you only communicate with your coworkers for Official Business™. Sending memes is one more way to be silly and enjoy each other’s company, even if we can’t be in the same room. It’s also a neat way to eat our own dogfood.

Future Improvements

There’s lots of room to improve this project, but the first area I’ve been actively working on is the fleet organization itself. When starting out I wanted each user to be able to provision their own device, so I chose to have users add the device to their project and share an API key with the bot using a private command on Slack. This is an interesting application for controlling devices across multiple projects, but it’s overly complex for this particular application.

Bot-created credentials, all on the same project

Currently, users need to generate credentials, assign them to the device via serial, and share an API key using the Slack bot. A better arrangement would have been to use the Slackbot to acquire the credentials in the first place. A future improvement will change the /register command to generate new PSK-ID/PSK credentials and send them to the user privately in Slack. This way all devices can be registered on one project, even though the user doesn’t have access to that project’s Golioth Console.

OTA is crucial to fleet management

With the fleet already deployed, how do you make these changes? Via OTA firmware update, of course. I have already implemented a key rotation feature using Golioth’s Remote Procedure Call. The bot can send each device a new PSK-ID/PSK, which will be tested and stored, moving each member of the fleet over to a single project.