Prior to joining Golioth a little over a year ago, I remember being struck by the number of services and features offered by the platform. OTA updates, logging, time-series data storage, a real-time database, settings — the list goes on. Coming from a cloud services background, I felt certain that Golioth was unique amongst its peers in trying to offer every IoT service a product could possibly need, but as I continued to research competitors I was surprised to see that this was the norm, not the exception.

It seemed that every platform was racing to cover every service that an internet-connected device could ever need. This has resulted in a confusing market for consumers, full of solutions with heavy overlap in service offerings. “Why does every platform feel the need to solve every problem?” I wondered to myself. In hindsight, the answer is obvious: connectivity.

In the cloud, where we reside in the luxurious confines of our precision-cooled data centers, we don’t spend too much time sweating one more TCP connection. We’ll happily add a managed service to our architecture to avoid having to build something from scratch or, even more importantly, keep it running. In the world of embedded devices, we are not so fortunate.

A single device may be expected to run on battery for years, and network access may be limited, especially for devices in transit. For example, we’ve gone to great lengths to dramatically reduce how often some devices must perform DTLS handshakes. When deploying thousands or millions of devices, the margins matter. It makes sense that a connected device platform would try to give you the kitchen sink on your one precious network connection. Still, I am skeptical of the ability of IoT-focused platforms to offer every cloud service you could ever need, and I feel pretty comfortable saying that considering we are building one ourselves! In other words, I am concerned that companies and individuals building connected device products are being prevented from using the right tool for the job.

A Platform for Building Platforms

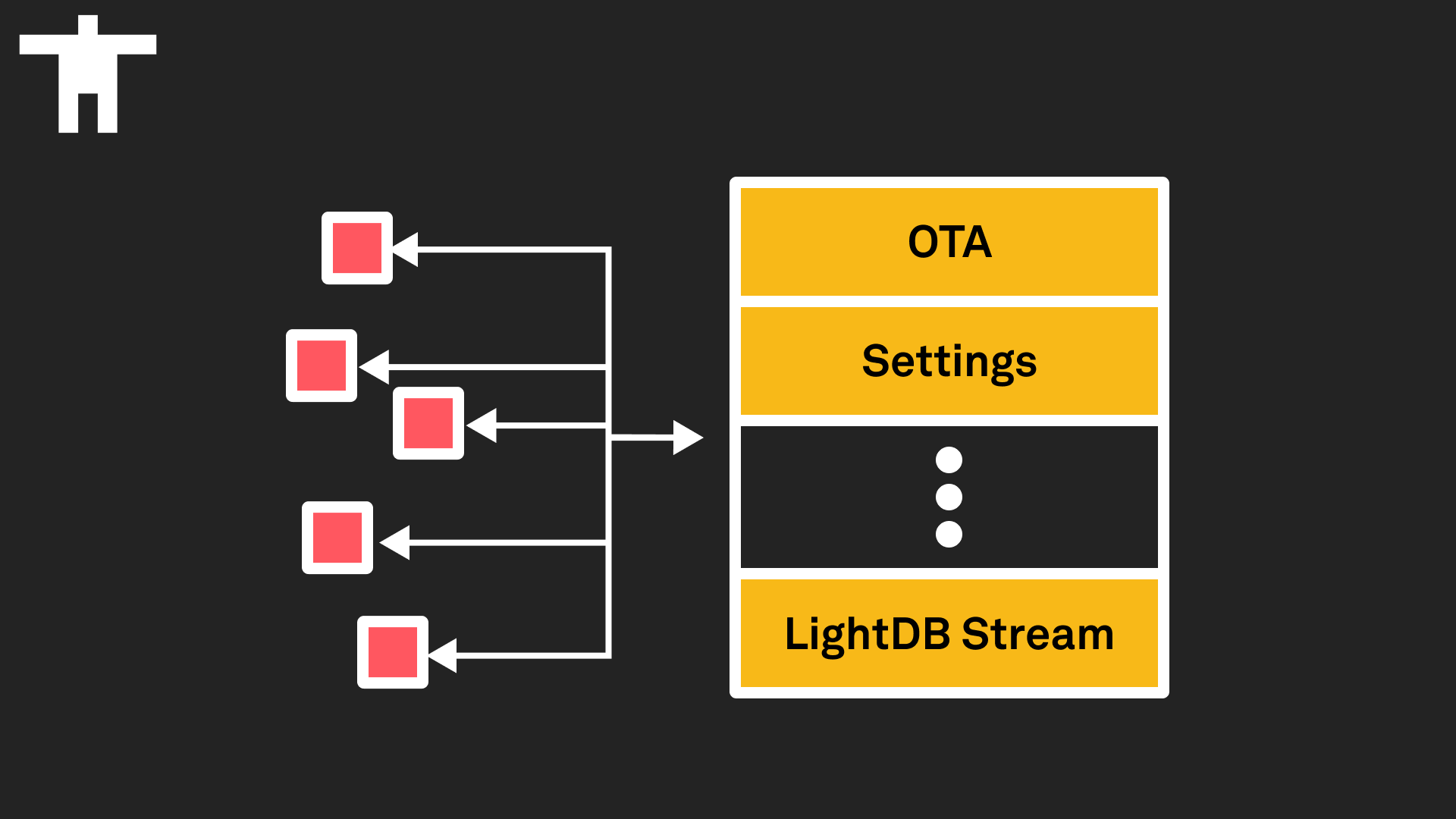

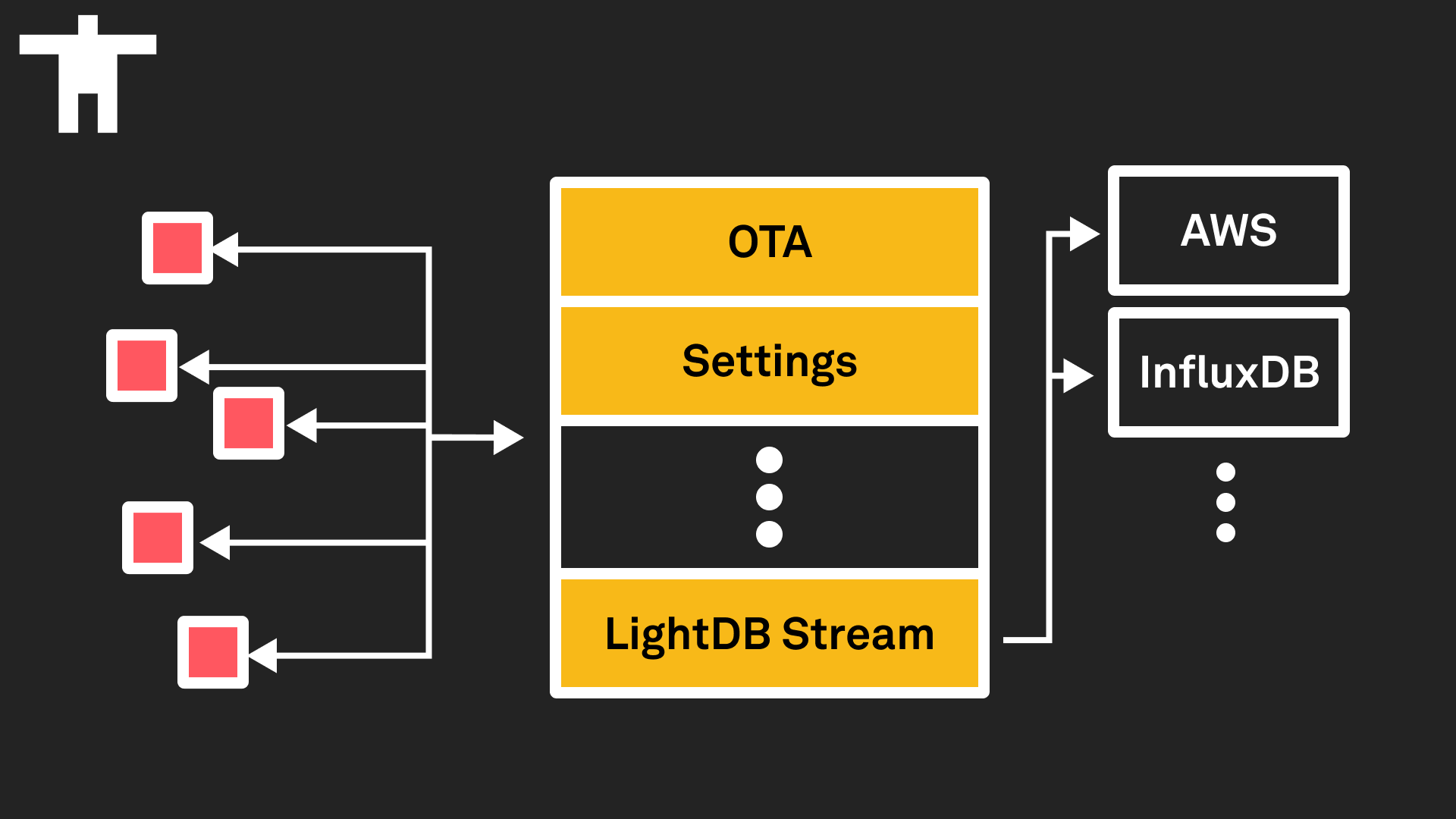

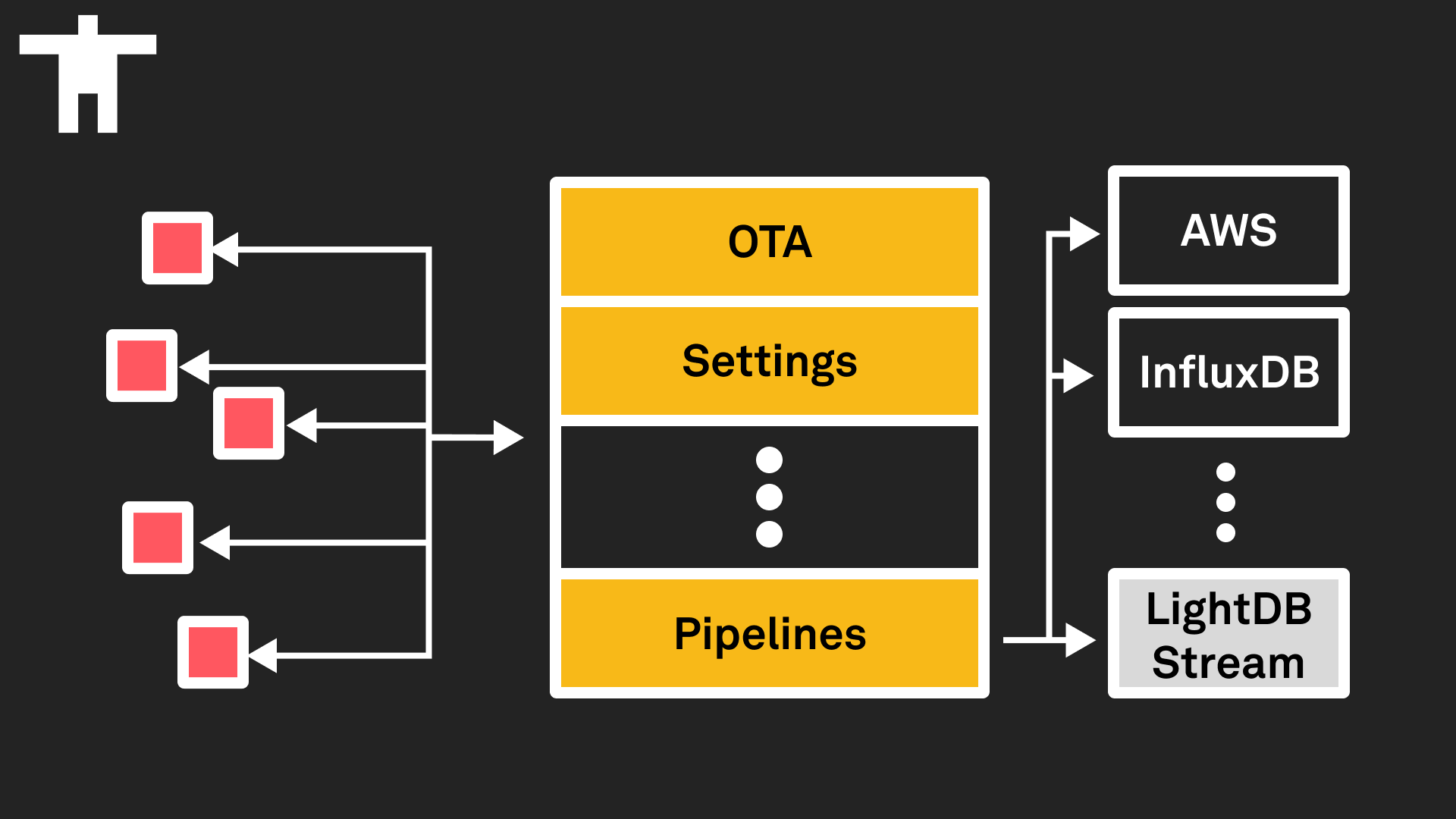

Last week, we announced a massive new feature of the platform: Golioth Pipelines. This isn’t just another new service. Rather, Pipelines makes it significantly easier to use services outside of Golioth. The easiest way to understand this change is by looking at the architecture before and after.

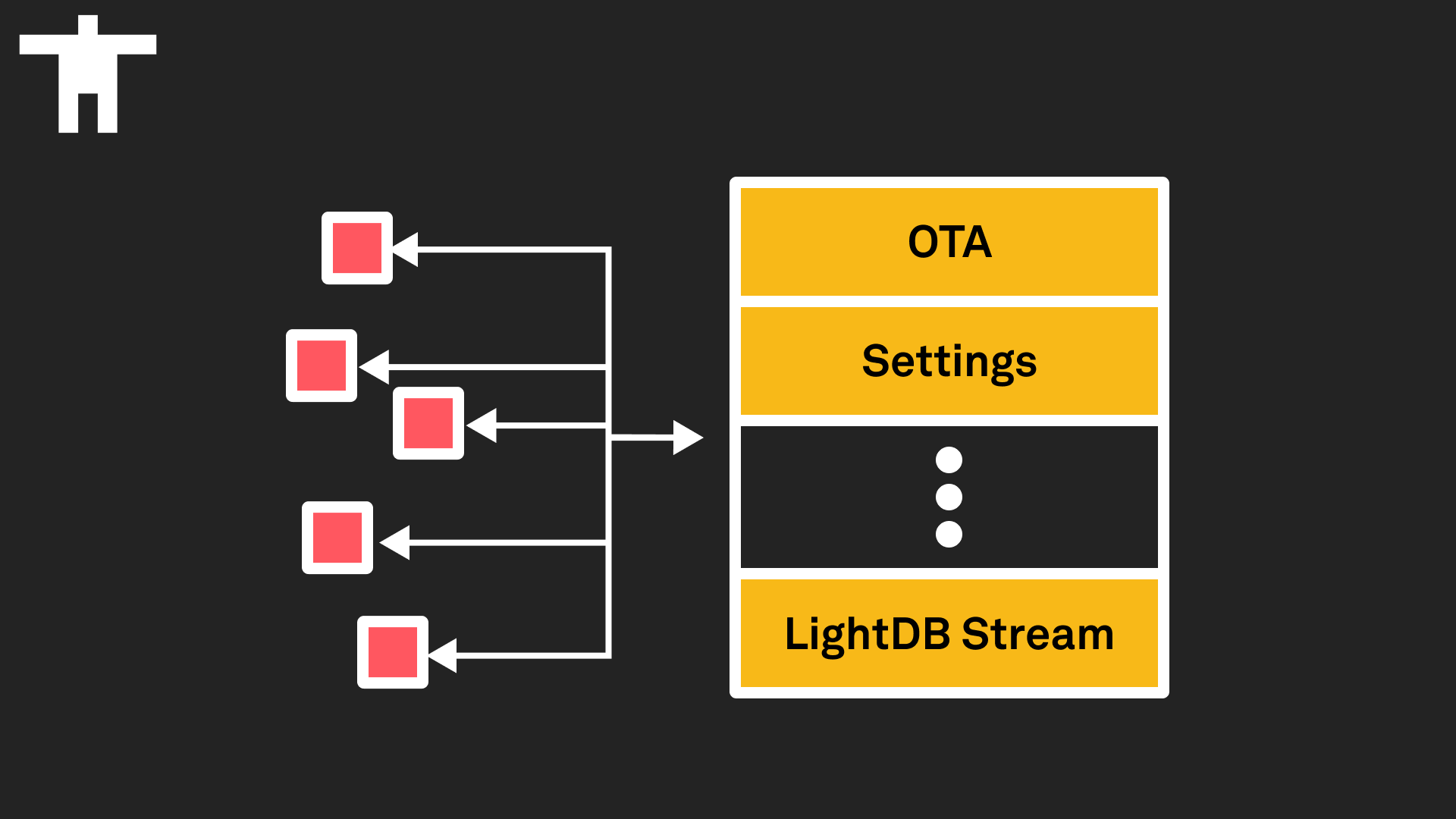

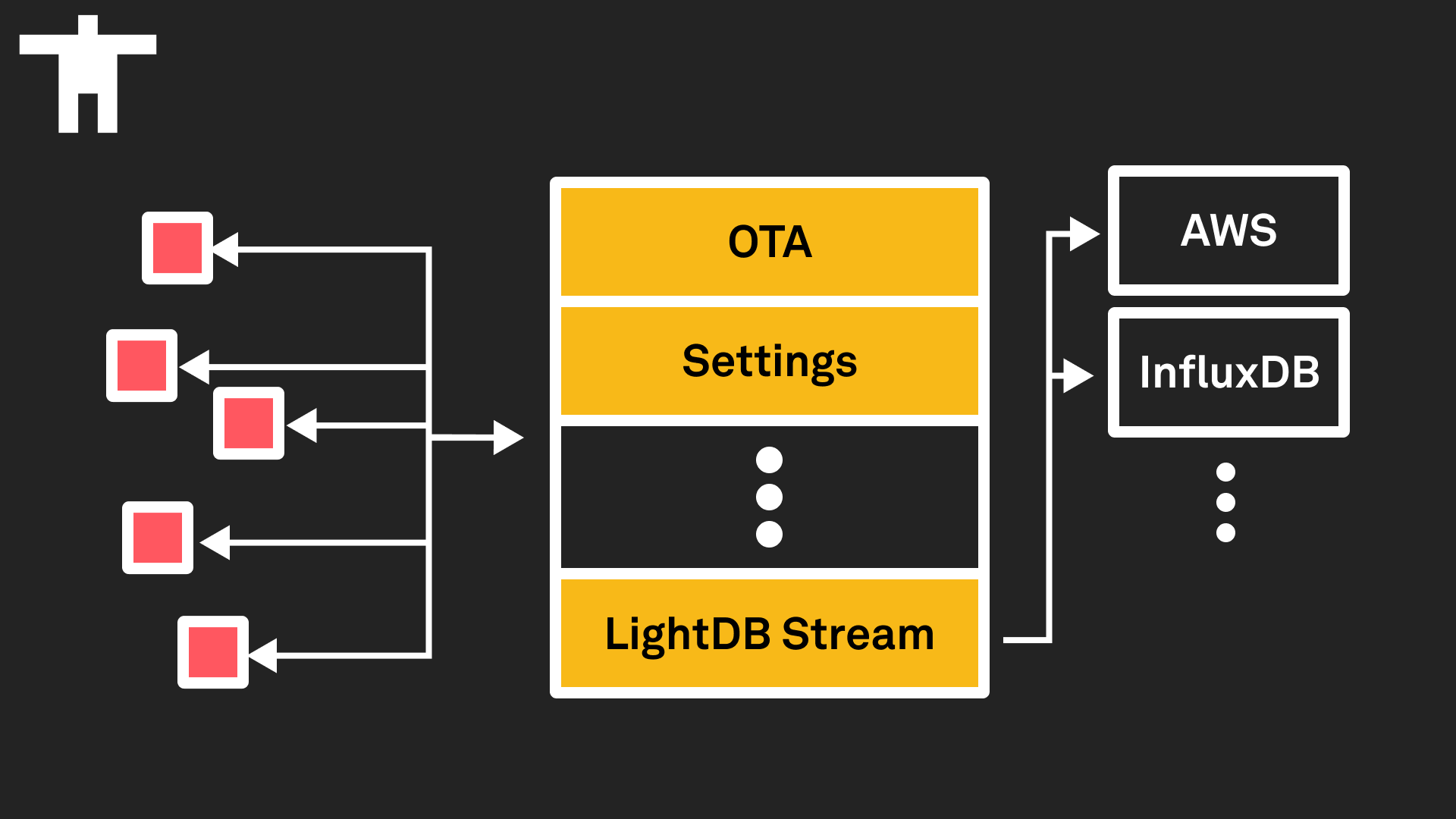

Previously, devices established a secure connection to Golioth, then talked to each of our many services directly. Every service includes opinions about how data should be formatted, how requests should be made, and how responses should be returned. If data needed to go somewhere other than Golioth, devices would either need to establish an additional connection (expensive) or use LightDB Stream’s Output Streams. The benefit of this structure, especially when combined with the Golioth Firmware SDK, was that developers incurred very little cognitive overhead when deciding how to format and deliver their data.

However, there are a few drawbacks with this approach. First, LightDB Stream only accepts semi-structured data in the form of JSON or CBOR. These data formats are flexible and easy to use when prototyping, but are less efficient than alternative options. This is especially true if binary data is being transmitted and devices are forced to represent it in a text-based format such as base64.
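To put a rough number on that overhead: base64 emits four ASCII characters for every three input bytes, so a binary payload grows by about a third before any JSON framing is added. The short Python sketch below shows this with the standard library. The 300-byte payload and the `reading` key are made up for illustration and are not part of any Golioth API.

```python
import base64
import json
import os

# Hypothetical 300-byte binary sensor payload (random bytes for illustration).
raw = os.urandom(300)

# base64 maps every 3 input bytes to 4 ASCII characters.
encoded = base64.b64encode(raw)

# Wrapping the text in JSON adds the key, quotes, and braces on top.
as_json = json.dumps({"reading": encoded.decode("ascii")}).encode("utf-8")

print("raw bytes:   ", len(raw))
print("base64 bytes:", len(encoded))
print("JSON bytes:  ", len(as_json))
print(f"base64 overhead: {len(encoded) / len(raw) - 1:.0%}")
```

On a battery-powered device with a constrained link, that extra third is paid on every message, which is why being able to send raw binary matters.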

The second drawback is that LightDB Stream is persistent. Any stream data flowing through Golioth to an external destination was stored in LightDB Stream in addition to being passed along to its final location. This not only incurs costs for users, but also results in data being resident on both Golioth and the external system to which it is being forwarded. In environments with stringent compliance requirements, this can be a significant blocker.

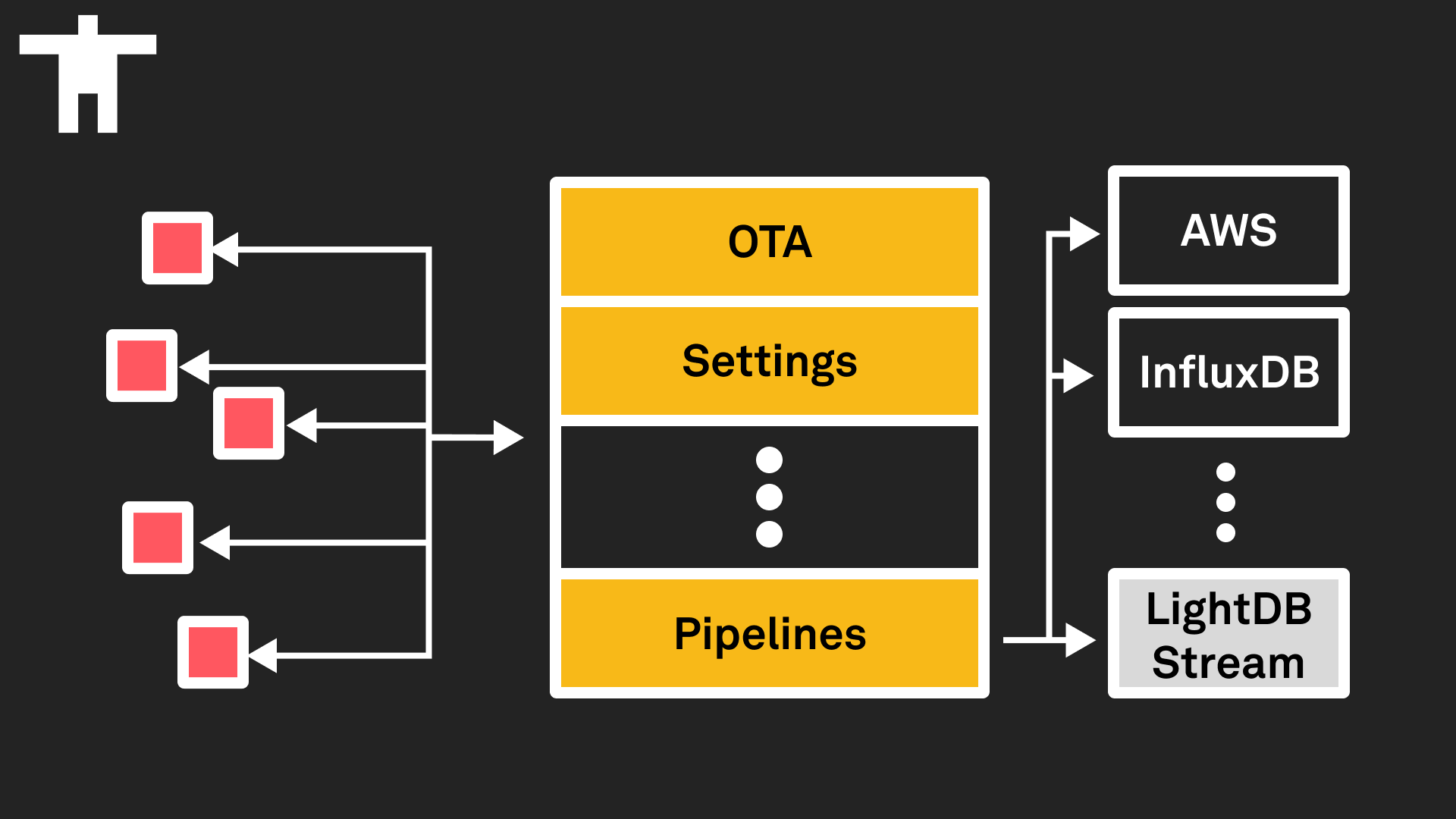

Pipelines moves LightDB Stream from the point of ingestion to the point of persistence, making it available as an optional data destination alongside those outside of the Golioth platform. There are still advantages to using LightDB Stream, especially when prototyping, due to its native integration into the platform. Data can be viewed in the Golioth console directly, and there is no need to create external accounts or credentials. We’ve created a new category for these types of offerings: application services. Users may leverage them to build out their product, but the Golioth platform enables substituting alternatives as well.

Users who previously enjoyed having data live in both LightDB Stream and an external destination can continue leveraging that pattern by creating a pipeline that routes data to multiple locations, using transformers to ensure that data is delivered in the proper format to each.

    filter:
      path: /temp
      content_type: application/cbor
    steps:
      - name: step-0
        destination:
          type: gcp-pubsub
          version: v1
          parameters:
            topic: projects/my-project/topics/my-topic
            service_account: $GCP_SERVICE_ACCOUNT
      - name: step-1
        transformer:
          type: cbor-to-json
          version: v1
      - name: step-2
        transformer:
          type: inject-path
          version: v1
        destination:
          type: lightdb-stream
          version: v1
      - name: step-3
        destination:
          type: influxdb
          version: v1
          parameters:
            url: https://us-east-1-1.aws.cloud2.influxdata.com
            token: $INFLUXDB_TOKEN
            bucket: device_data
            measurement: sensor_data
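One way to read the pipeline above is as a sequence in which each transformer's output feeds both its own destination and every later step. The Python sketch below models that reading. It is a toy simulation, not Golioth's implementation: the step-chaining semantics, the stand-in transformer functions, and the idea that `inject-path` nests the payload under the message path are all assumptions made for illustration, and the "CBOR" input is modeled as an already-decoded Python value.

```python
import json

def cbor_to_json(payload, path):
    # Stand-in for cbor-to-json: the "CBOR" input is modeled as a decoded
    # Python value, and the output is JSON text.
    return json.dumps(payload)

def inject_path(payload, path):
    # Assumed behavior: nest the JSON payload under the message path,
    # so a payload sent to /temp ends up under a "temp" key.
    nested = json.loads(payload)
    for key in reversed(path.strip("/").split("/")):
        nested = {key: nested}
    return json.dumps(nested)

# Mirrors the four steps in the pipeline above (destinations are just labels).
STEPS = [
    {"name": "step-0", "destination": "gcp-pubsub"},
    {"name": "step-1", "transformer": cbor_to_json},
    {"name": "step-2", "transformer": inject_path, "destination": "lightdb-stream"},
    {"name": "step-3", "destination": "influxdb"},
]

def run_pipeline(steps, payload, path):
    """Run steps in order, recording what each destination would receive."""
    deliveries = []
    for step in steps:
        transformer = step.get("transformer")
        if transformer is not None:
            payload = transformer(payload, path)
        destination = step.get("destination")
        if destination is not None:
            deliveries.append((destination, payload))
    return deliveries

for destination, data in run_pipeline(STEPS, {"value": 21.5}, "/temp"):
    print(destination, "<-", data)
```

Under these assumptions, the first destination receives the payload untouched, while the later destinations receive the JSON form produced by the transformers that ran before them.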

But Pipelines is more than just addressing the issues of LightDB Stream. Pipelines represents a paradigm shift in the architecture of the Golioth platform, which will enable additional features in the coming months. Rather than being constrained by an opinionated Golioth API, users can define their own APIs on Golioth by creating multiple pipelines with different path and content_type filters. This technical change reflects our renewed focus on Golioth’s core value proposition: device connectivity.
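To make the idea of defining an API through filters concrete, here is a minimal Python sketch of how incoming messages might be matched against several pipelines. The pipeline names, the exact-match rule, and the treatment of an omitted filter field as "match anything" are assumptions for illustration, not a description of how Golioth evaluates filters.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Pipeline:
    name: str
    path: Optional[str] = None          # None is assumed to mean "any path"
    content_type: Optional[str] = None  # None is assumed to mean "any content type"

    def matches(self, path, content_type):
        # A message matches when every filter field that is set agrees with it.
        return (self.path in (None, path)
                and self.content_type in (None, content_type))

# Hypothetical set of pipelines, each acting like one "endpoint" of a custom API.
PIPELINES = [
    Pipeline("temp-cbor", path="/temp", content_type="application/cbor"),
    Pipeline("raw-images", path="/image", content_type="application/octet-stream"),
    Pipeline("json-archive", content_type="application/json"),
]

def route(path, content_type):
    """Return the names of all pipelines whose filter matches the message."""
    return [p.name for p in PIPELINES if p.matches(path, content_type)]

print(route("/temp", "application/cbor"))
print(route("/logs", "application/json"))
print(route("/image", "application/cbor"))
```

In this model the device chooses a path and content type, and the set of configured pipelines decides where the data goes and in what shape.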

Behind the Scenes

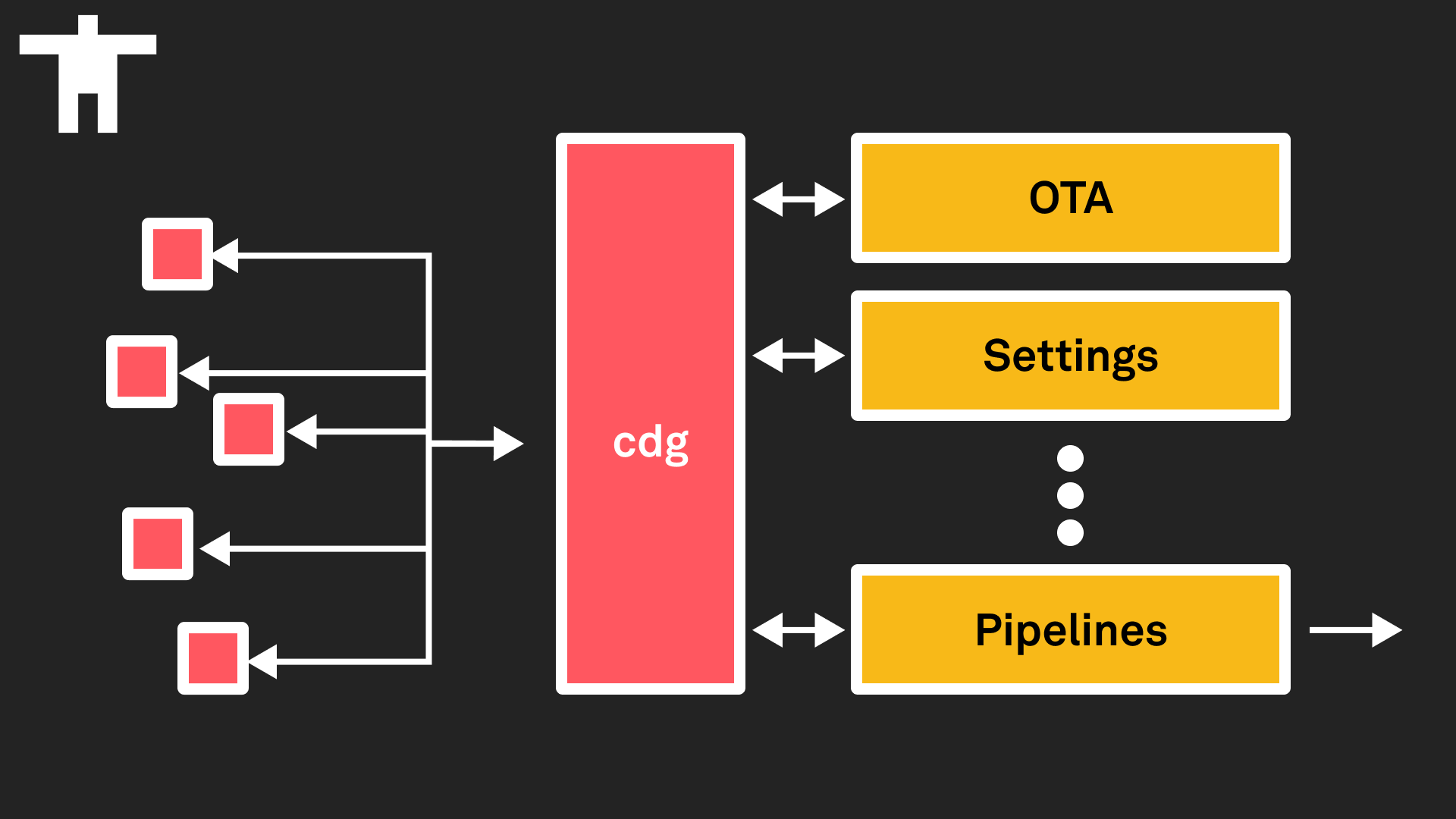

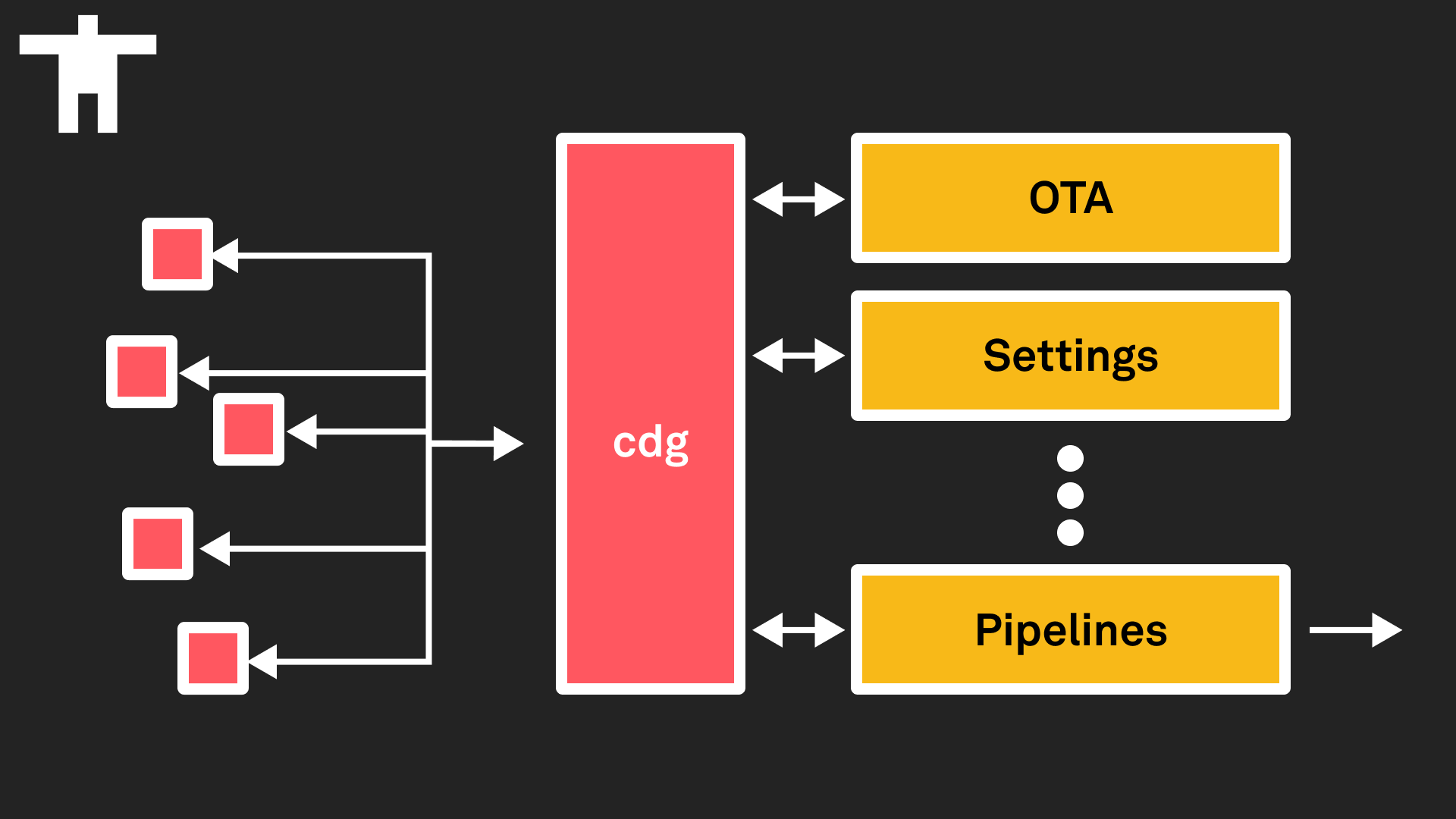

While Pipelines represents a large change in user-facing functionality, the smooth rollout was enabled by a silent launch months before. Internally, all device traffic on Golioth was directed through a new component in our cloud infrastructure, the Constrained Device Gateway (cdg), a protocol-agnostic proxy we developed that is responsible for managing connection state and brokering bidirectional communication between devices and services. Hints of this new component have been present since the introduction of the Golioth Simulator, which talks to device services via HTTP rather than CoAP.

With cdg in place, we were able to easily begin routing a subset of traffic to the infrastructure that powers Pipelines. This enabled the internal use of Pipelines in production weeks prior to general availability, and has allowed for continued use of Output Streams for projects that were previously leveraging the functionality. These projects now have the opportunity to be automatically migrated from Output Streams to Pipelines when they are ready.

It also means that the pace at which we are able to safely introduce new services and features is dramatically increased. Previously, authoring a new service required deep understanding of how to handle CoAP request / response patterns and observations. Furthermore, when a new protocol was introduced, every service would need to be updated with support. These communication patterns are now generalized. Services can easily leverage the internal cdg SDK to both respond to requests from devices and dispatch notifications to them, all using their preferred protocol.

While this functionality is only available for internal services today, it opens the door for bringing some of the user-defined capabilities of Pipelines, which are unidirectional, to bidirectional services. Doing so would further enable Golioth users to define their own platform on top of a single secure connection between devices and the cloud, eliminating the “all or nothing” nature of the decision whether to use an IoT platform or build one in-house.

The Road Ahead

Complexity comes with optionality, and as we continue expanding the realm of what is possible on Golioth, we will also continue to invest in observability. In the coming weeks we’ll be introducing new features that give additional visibility into what data is flowing through Pipelines and where it is being delivered. We’ll also be offering greater insight into how frequently individual devices are connecting to Golioth, and how much data they are sending.

Realizing the vision of making Golioth the universal connector for IoT means giving more choice to our users, and we are excited for how this new architecture enables that goal. If you’re building a connected device product, check out Golioth today!