IoT is all about data. How you choose to send that data over the network can have a large impact on your bandwidth and power budgets. Golioth includes the ability to batch upload streaming data, which is great for cached readings and lets your device stay in low power mode for more of the time. Today I’ll detail how to send IoT data in batches.

What is Batch Data?

Batch data simply means one payload that encompasses multiple sensor readings.

    [
      {
        "ts": 1719592181,
        "counter": 330
      },
      {
        "ts": 1719592186,
        "counter": 331
      },
      {
        "ts": 1719592191,
        "counter": 332
      }
    ]

The example above shows three readings, each passing a counter value that represents a sensor reading, along with a timestamp for when that reading was taken.

Sending Batch Data from an IoT Device

The sample firmware can be found at the end of the post, but generally speaking, the device doesn’t need to do anything different to send batch data. The key is to format the data as a list of readings, whether you’re sending JSON or CBOR.
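As a minimal sketch of that formatting step, here is one way a device might turn cached readings into a JSON list. The `struct reading` type and the `build_batch_json` helper are hypothetical names I made up for illustration; they are not part of the Golioth Firmware SDK.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical cached reading; field names mirror the JSON example above. */
struct reading {
    uint32_t ts;      /* Unix epoch seconds when the reading was taken */
    uint32_t counter; /* stand-in for a real sensor value */
};

/*
 * Serialize `count` readings into `buf` as a JSON array of objects.
 * Returns the string length on success, or -1 if `buf` is too small.
 */
static int build_batch_json(const struct reading *r, size_t count,
                            char *buf, size_t buf_len)
{
    size_t off = 0;
    int n = snprintf(buf, buf_len, "[");

    if (n < 0 || (size_t)n >= buf_len) {
        return -1;
    }
    off += (size_t)n;

    for (size_t i = 0; i < count; i++) {
        /* Every object after the first is preceded by a comma. */
        n = snprintf(buf + off, buf_len - off,
                     "%s{\"ts\":%lu,\"counter\":%lu}",
                     (i == 0) ? "" : ",",
                     (unsigned long)r[i].ts,
                     (unsigned long)r[i].counter);
        if (n < 0 || (size_t)n >= buf_len - off) {
            return -1;
        }
        off += (size_t)n;
    }

    n = snprintf(buf + off, buf_len - off, "]");
    if (n < 0 || (size_t)n >= buf_len - off) {
        return -1;
    }
    off += (size_t)n;

    return (int)off;
}
```

The helper leaves a NUL-terminated string in `buf`, so the `strlen(buf)` used in the call below still works. The returned length could be passed instead to avoid a second pass over the buffer.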

    int err = golioth_stream_set_async(client,
                                       "",
                                       GOLIOTH_CONTENT_TYPE_JSON,
                                       buf,
                                       strlen(buf),
                                       async_push_handler,
                                       NULL);

We call the Stream data API above. The client, the stream path (an empty string here), and the content type are passed as the first three arguments, then the buffer holding the data and the buffer length are supplied. The last two parameters are a callback function and an optional user data pointer.
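Since the same list-of-readings shape applies to CBOR, here is a rough sketch of encoding a batch as a CBOR array of maps. This is a hand-rolled encoder written only for illustration, and every name in it (`struct reading`, `struct cbor_writer`, `cbor_encode_batch`, and the `put_*` helpers) is hypothetical. A real project would more likely use a CBOR library. The device would then send the buffer with `GOLIOTH_CONTENT_TYPE_CBOR` and the encoded length rather than `strlen()`. A CBOR payload would also not match the `application/json` filter used in the pipelines in this post.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical cached reading, same shape as the JSON example. */
struct reading {
    uint32_t ts;
    uint32_t counter;
};

/* Hypothetical bounded output buffer with a sticky overflow flag. */
struct cbor_writer {
    uint8_t *buf;
    size_t cap;
    size_t len;
    int overflow;
};

static void put_bytes(struct cbor_writer *w, const void *src, size_t n)
{
    if (w->overflow || n > w->cap - w->len) {
        w->overflow = 1;
        return;
    }
    memcpy(w->buf + w->len, src, n);
    w->len += n;
}

/* Write a CBOR head: major type in the top 3 bits, then the shortest
 * big-endian encoding of `val` (up to 32 bits in this sketch). */
static void put_head(struct cbor_writer *w, uint8_t major, uint32_t val)
{
    uint8_t h[5];
    size_t n;
    uint8_t mt = (uint8_t)(major << 5);

    if (val < 24) {
        h[0] = (uint8_t)(mt | val);
        n = 1;
    } else if (val <= 0xFF) {
        h[0] = (uint8_t)(mt | 24);
        h[1] = (uint8_t)val;
        n = 2;
    } else if (val <= 0xFFFF) {
        h[0] = (uint8_t)(mt | 25);
        h[1] = (uint8_t)(val >> 8);
        h[2] = (uint8_t)val;
        n = 3;
    } else {
        h[0] = (uint8_t)(mt | 26);
        h[1] = (uint8_t)(val >> 24);
        h[2] = (uint8_t)(val >> 16);
        h[3] = (uint8_t)(val >> 8);
        h[4] = (uint8_t)val;
        n = 5;
    }
    put_bytes(w, h, n);
}

/* Major type 3: UTF-8 text string. */
static void put_text(struct cbor_writer *w, const char *s)
{
    size_t n = strlen(s);

    put_head(w, 3, (uint32_t)n);
    put_bytes(w, s, n);
}

/* Encode readings as an array (major 4) of two-entry maps (major 5).
 * Returns the encoded length, or -1 if `out` is too small. */
static int cbor_encode_batch(const struct reading *r, size_t count,
                             uint8_t *out, size_t cap)
{
    struct cbor_writer w = { out, cap, 0, 0 };

    put_head(&w, 4, (uint32_t)count);
    for (size_t i = 0; i < count; i++) {
        put_head(&w, 5, 2);
        put_text(&w, "ts");
        put_head(&w, 0, r[i].ts);      /* major 0: unsigned integer */
        put_text(&w, "counter");
        put_head(&w, 0, r[i].counter);
    }
    return w.overflow ? -1 : (int)w.len;
}
```

For the first reading in the earlier example, this produces 21 bytes, compared with 31 characters for the same object in compact JSON.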

Routing Batch Data using a Golioth Pipeline

Batch data will be automatically sorted out by the Golioth servers based on the pipeline you use.

    filter:
      path: "*"
      content_type: application/json
    steps:
      - name: step0
        destination:
          type: batch
          version: v1
      - name: step1
        destination:
          type: lightdb-stream
          version: v1

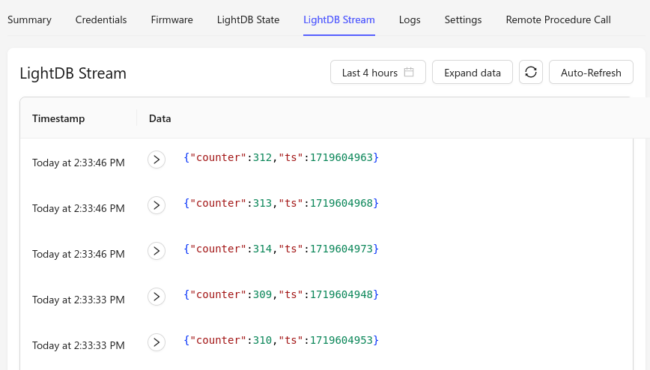

This example pipeline listens for JSON data coming in on any stream path. In step0 it “unpacks” the batch data into individual readings. In step1 the individual readings are routed to Golioth’s LightDB stream. Here’s what that looks like:

Note that all three readings are coming in with the same server-side timestamp. The device timestamp is preserved in the data, but you can also use Pipelines to tell Golioth to use the embedded timestamps.
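To make the “unpacking” idea concrete, here is a small conceptual sketch in C that walks a JSON list and hands each top-level object to a callback, one reading at a time. This is only an illustration of the concept and not how Golioth implements the batch destination. The names `split_batch`, `element_cb`, `struct collector`, and `collect` are all hypothetical.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical callback: receives one element (not NUL-terminated). */
typedef void (*element_cb)(const char *start, size_t len, void *arg);

/*
 * Walk a JSON array and call `cb` once per top-level object.
 * Returns the number of objects found, or -1 if the input is not a list.
 */
static int split_batch(const char *json, element_cb cb, void *arg)
{
    const char *p = json;
    const char *start = NULL;
    int depth = 0, count = 0, in_string = 0;

    while (*p == ' ' || *p == '\n' || *p == '\t' || *p == '\r') {
        p++;
    }
    if (*p != '[') {
        return -1;
    }
    p++;

    for (; *p != '\0'; p++) {
        if (in_string) {
            if (*p == '\\' && p[1] != '\0') {
                p++; /* skip the escaped character */
            } else if (*p == '"') {
                in_string = 0;
            }
            continue;
        }
        if (*p == '"') {
            in_string = 1;
        } else if (*p == '{') {
            if (depth++ == 0) {
                start = p; /* a new top-level object begins here */
            }
        } else if (*p == '}') {
            if (depth > 0 && --depth == 0) {
                cb(start, (size_t)(p - start + 1), arg);
                count++;
            }
        } else if (*p == ']' && depth == 0) {
            break; /* end of the outer list */
        }
    }
    return count;
}

/* Example callback: count elements and keep a copy of the latest one. */
struct collector {
    int seen;
    char last[64];
};

static void collect(const char *start, size_t len, void *arg)
{
    struct collector *c = arg;

    if (len >= sizeof(c->last)) {
        len = sizeof(c->last) - 1; /* truncate to fit */
    }
    memcpy(c->last, start, len);
    c->last[len] = '\0';
    c->seen++;
}
```

Run against the three-reading payload from earlier, `split_batch` invokes the callback three times, once for each `{"ts": ..., "counter": ...}` object. Each of those objects is what the next pipeline step then sees as a single reading.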

Batch Data with Timestamp Extract

For this example we’re using a very similar pipeline, with one additional transformer to extract the timestamp from the readings and use it as the LightDB Stream timestamp.

    filter:
      path: "*"
      content_type: application/json
    steps:
      - name: step0
        destination:
          type: batch
          version: v1
      - name: step1
        transformer:
          type: extract-timestamp
          version: v1
        destination:
          type: lightdb-stream
          version: v1

Note that we didn’t even need an additional step, but simply added the transformer to the step that already set lightdb-stream as the destination.

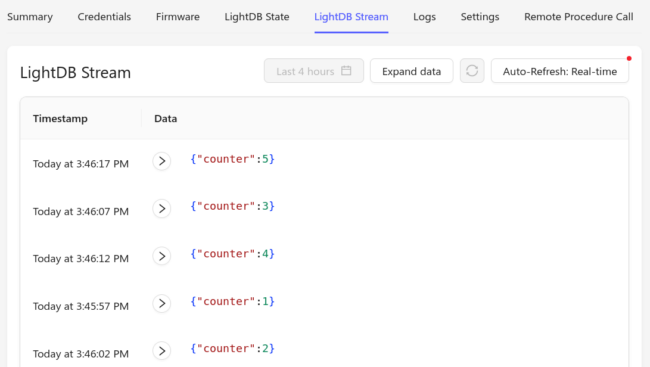

You can see that the Unix epoch timestamp has been pulled out of the data and assigned to the LightDB Stream timestamp. Extracting the timestamp is not unique to Golioth’s LightDB Stream service.

Streaming data may be routed anywhere you want it. For instance, if you wanted to send your data to a webhook, just use the webhook destination. If you included the extract-timestamp transformer, your data will arrive at the webhook with the timestamps from your device as part of the metadata instead of nested in the JSON object.

Using a Special Path for Batch Data

What happens if your app wants to send other types of streaming data beyond batch data? The batch destination will automatically drop data that isn’t a list of data objects. But you might like to be more explicit about where you send data and for that you can easily create a path to receive batch data.

    filter:
      path: "/batch/"
      content_type: application/json
    steps:
      - name: step0
        destination:
          type: batch
          version: v1
      - name: step1
        transformer:
          type: extract-timestamp
          version: v1
        destination:
          type: lightdb-stream
          version: v1

This pipeline is nearly the same as before with the only change on line 2 where the * wildcard was removed from path and replaced with "/batch/". Now we can update the API call in the device firmware to target that path:

    int err = golioth_stream_set_async(client,
                                       "batch",
                                       GOLIOTH_CONTENT_TYPE_JSON,
                                       buf,
                                       strlen(buf),
                                       async_push_handler,
                                       NULL);

Although the result hasn’t changed, this does make the intent of the firmware more clear, and it differentiates the intent of this pipeline from others.

Sample Firmware

This is a quick sample firmware I made to use while writing this post. It targets the nrf9160dk. One major caveat is that the function that pulls time from the cellular network is quite rudimentary and should be replaced on anything that you plan to use in production.

To try it out, start from the Golioth Hello sample and replace the main.c file. This post was written using v0.14.0 of the Golioth Firmware SDK.

Wrapping Up

Batch data upload is a common request in the IoT realm. Golioth has not only the ability to sort out your batch data uploads, but to route them where you want and even to transform that data as needed. If you want to know more about what Pipelines brings to the party, check out the Pipelines announcement post.
