Today, we are thrilled to announce the launch of Golioth for AI, a comprehensive set of features designed to simplify and enhance the integration of AI into IoT products.

At Golioth, we envision a future where AI and IoT converge to create smarter, more efficient systems that can learn, adapt, and improve over time. The fusion of AI and IoT has the potential to unlock unprecedented levels of innovation and automation across various industries. However, integrating AI into IoT devices can be complex and challenging, requiring robust solutions for managing models, training data, and performing inference.

Today, at Golioth, we are addressing these challenges head-on. Our new set of features focuses on three core pillars: training data, model management, and inference. By streamlining these processes, we aim to empower developers and businesses to quickly add AI to IoT projects where doing so was not previously practical.

Training Data: Unlocking the Potential of IoT Data

At Golioth, we recognize that IoT devices generate rich, valuable data that can be used to train innovative AI models. However, this data is often inaccessible, in the wrong format, or difficult to stream to the cloud. We’re committed to helping teams extract this data and route it to the right destinations for training AI models that solve important physical world problems.

We’ve been building up to this with our launch of Pipelines, and new destinations and transformers have been added every week since. Learn more about Pipelines in our earlier announcement.

In v0.14.0 of our Firmware SDK, we added support for block-wise uploads. This new capability allows for streaming larger payloads, such as high-resolution images and audio, to the cloud. This unlocks incredible potential for new AI-enabled IoT applications, from connected cameras streaming images for security and quality inspection to audio-based applications for voice recognition and preventative maintenance for industrial machines.
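
To make the idea of a block-wise upload concrete, here is a minimal Python sketch of how a large payload can be cut into fixed-size blocks, each tagged with its index and a flag saying whether more blocks follow. It illustrates the general technique only. It is not the Golioth Firmware SDK API (which is written in C), and the `iter_blocks` name and the 1024-byte block size are assumptions made for this example.

```python
from typing import Iterator, Tuple

BLOCK_SIZE = 1024  # assumed block size, chosen only for illustration


def iter_blocks(
    payload: bytes, block_size: int = BLOCK_SIZE
) -> Iterator[Tuple[int, bool, bytes]]:
    """Yield (block_index, more_follows, chunk) for each block of payload."""
    if block_size <= 0:
        raise ValueError("block_size must be positive")
    total = len(payload)
    # An empty payload is still represented as a single empty block.
    if total == 0:
        yield 0, False, b""
        return
    for index, offset in enumerate(range(0, total, block_size)):
        chunk = payload[offset:offset + block_size]
        more = offset + block_size < total
        yield index, more, chunk


if __name__ == "__main__":
    image = bytes(2500)  # stand-in for an image captured on the device
    for index, more, chunk in iter_blocks(image):
        print(index, more, len(chunk))
```

Because each block carries its own index and a "more" flag, the receiver can reassemble the payload and knows when the upload is complete, while the sender never needs to hold more than one block in a transmit buffer.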

For an example of uploading images or audio files to Golioth for training, see:

We’ve recently added three new object storage destinations for Pipelines:

These storage solutions are perfect for handling the rich media data essential for training AI models, ensuring your training set is always up to date with live data from in-field devices.

Partnership with Edge Impulse

Today, we’re also excited to announce our official partnership with Edge Impulse, a leading platform for developing and optimizing AI for the edge. This partnership allows streaming of IoT data from Golioth to Edge Impulse for advanced model training, fine-tuned for microcontroller class devices. Using Edge Impulse’s AWS S3 Data Acquisition, you can easily integrate with Golioth’s AWS S3 Pipelines destination by sharing a bucket for training data:

    filter:
      path: "*"
      content_type: application/octet-stream
    steps:
      - name: step-0
        destination:
          type: aws-s3
          version: v1
          parameters:
            name: my-bucket
            access_key: $AWS_ACCESS_KEY
            access_secret: $AWS_ACCESS_SECRET
            region: us-east-1

This streamlined approach enables you to train cutting-edge AI models efficiently, leveraging the power of both the Golioth and Edge Impulse platforms. For a full demonstration of using Golioth with Edge Impulse see: https://github.com/edgeimpulse/example-golioth

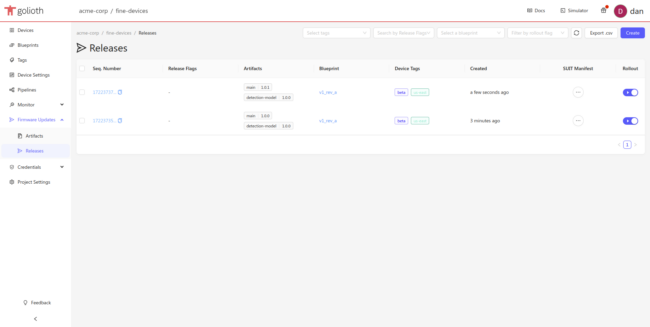

Model Management: Flexible OTA Updates

Deploying AI models to devices is crucial for keeping them updated with the latest capabilities and adapting to new use cases and edge cases as they arise. However, deploying a full OTA firmware update every time you need to update your AI model is inefficient and costly.

To address this, Golioth’s Over-the-Air (OTA) update system has been enhanced to support a broader range of artifact types, including AI models and media files.

Our updated OTA capabilities let you deploy and update AI models independently of firmware. Skipping the full firmware update saves bandwidth, reduces battery consumption, and minimizes downtime, while ensuring your devices always have the latest AI capabilities.
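
One way to picture independent model updates is a device comparing the versions it has installed against a list of available artifacts and fetching only what changed. The Python sketch below illustrates that comparison. The dictionary layout, the package names (`main`, `model`), and the `artifacts_to_update` helper are hypothetical; they are not the Golioth OTA manifest format or SDK API.

```python
from typing import Dict, List


def artifacts_to_update(installed: Dict[str, str], manifest: Dict[str, str]) -> List[str]:
    """Return the packages whose manifest version differs from the installed one."""
    return sorted(
        package
        for package, version in manifest.items()
        if installed.get(package) != version
    )


if __name__ == "__main__":
    # Hypothetical versions: the firmware is current, the model is not.
    installed = {"main": "1.2.0", "model": "1.0.0"}
    manifest = {"main": "1.2.0", "model": "1.1.0"}
    print(artifacts_to_update(installed, manifest))
```

In this example only the model artifact is selected for download, which is the bandwidth and downtime saving described above.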

We’ve put together an example demonstrating deploying a TensorFlow Lite model here: Deploying TensorFlow Lite Model.

Flox Robotics is already leveraging our model management capabilities to deploy AI models that detect wildlife and ensure their safety. Their AI deterrent systems prevent wildlife from entering dangerous areas, significantly reducing harm and preserving ecosystems. Read the case study.

Inference: On-Device and in the Cloud

Inference is the core of AI applications, and with Golioth, you can now perform inference both on devices and in the cloud. On-device inference offers lower latency, reduced bandwidth usage, and the ability to operate offline, so it is often preferred for real-time monitoring, autonomous systems, and other scenarios where immediate decision-making is critical.

However, inference in the cloud is sometimes ideal or necessary for tasks that require significant processing power, such as high-resolution image analysis, complex pattern recognition, and large-scale data aggregation, because it can draw on more powerful computational resources and larger models.

Golioth Pipelines now supports inference transformers and destinations, integrating with leading AI platforms including Replicate, Hugging Face, OpenAI, and Anthropic. Using our new webhook transformer, you can leverage these platforms to perform inference within your pipelines. The results of the inference are then returned to your pipeline to be routed to any destination. Learn more about our new webhook transformer.

Here is an example of how you can configure a pipeline to send audio samples captured on a device to the Hugging Face Serverless Inference API, leveraging a fine-tuned HuBERT model for emotion recognition. The inference results are forwarded as timeseries data to LightDB Stream.

    filter:
      path: "/audio"
    steps:
      - name: emotion-recognition
        transformer:
          type: webhook
          version: v1
          parameters:
            url: https://api-inference.huggingface.co/models/superb/hubert-large-superb-er
            headers:
              Authorization: $HUGGING_FACE_TOKEN
      - name: embed
        transformer:
          type: embed-in-json
          version: v1
          parameters:
            key: text
      - name: send-lightdb-stream
        destination:
          type: lightdb-stream
          version: v1
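
To follow the data through this pipeline, the Python sketch below imitates the `embed` step offline. It assumes that the inference response is a JSON list of label/score objects and that `embed-in-json` stores the incoming payload as a string under the configured key. Both are assumptions for illustration, the sample response is made up, and the `embed_in_json` function here is a local stand-in rather than Golioth code.

```python
import json


def embed_in_json(payload: bytes, key: str) -> bytes:
    """Wrap a raw payload as a string value under `key` in a JSON object."""
    return json.dumps({key: payload.decode("utf-8")}).encode("utf-8")


if __name__ == "__main__":
    # Made-up response body standing in for the webhook step's output.
    response = json.dumps([
        {"label": "neu", "score": 0.9},
        {"label": "hap", "score": 0.1},
    ]).encode("utf-8")

    record = json.loads(embed_in_json(response, "text"))
    # The nested string can be decoded again by whoever reads the stream.
    print(json.loads(record["text"])[0]["label"])
```

Wrapping the response in an object gives the final destination a single JSON record to store, with the raw inference result kept intact under the `text` key.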

Golioth is continually releasing new examples to highlight the applications of AI on device and in the cloud. Here’s an example of uploading an image and configuring a pipeline to describe the image with OpenAI and send the resulting description to Slack:

    filter:
      path: "/image"
    steps:
      - name: jpeg
        transformer:
          type: change-content-type
          version: v1
          parameters:
            content_type: image/jpeg
      - name: url
        transformer:
          type: data-url
          version: v1
      - name: embed
        transformer:
          type: embed-in-json
          version: v1
          parameters:
            key: image
      - name: create-payload
        transformer:
          type: json-patch
          version: v1
          parameters:
            patch: |
              [
                {
                  "op": "add",
                  "path": "/model",
                  "value": "gpt-4o-mini"
                },
                {
                  "op": "add",
                  "path": "/messages",
                  "value": [
                    {
                      "role": "user",
                      "content": [
                        {
                          "type": "text",
                          "text": "What's in this image?"
                        },
                        {
                          "type": "image_url",
                          "image_url": {
                            "url": ""
                          }
                        }
                      ]
                    }
                  ]
                },
                {
                  "op": "move",
                  "from": "/image",
                  "path": "/messages/0/content/1/image_url/url"
                },
                {
                  "op": "remove",
                  "path": "/image"
                }
              ]
      - name: explain
        transformer:
          type: webhook
          version: v1
          parameters:
            url: https://api.openai.com/v1/chat/completions
            headers:
              Authorization: $OPENAI_TOKEN
      - name: parse-payload
        transformer:
          type: json-patch
          version: v1
          parameters:
            patch: |
              [
                {"op": "add", "path": "/text", "value": ""},
                {"op": "move", "from": "/choices/0/message/content", "path": "/text"}
              ]
      - name: send-webhook
        destination:
          type: webhook
          version: v1
          parameters:
            url: $SLACK_WEBHOOK
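
To see what the `url`, `embed`, and `create-payload` steps are working toward, the Python sketch below builds the same chat completions request body by hand from raw image bytes, then pulls the reply text out of a response the way `parse-payload` does. It is an offline illustration: `to_data_url`, `build_request`, and `extract_text` are hypothetical helper names, the sample bytes and response are made up, and no request is sent.

```python
import base64
import copy
import json
from typing import Any, Dict


def to_data_url(payload: bytes, content_type: str) -> str:
    """Encode raw bytes as a base64 data URL."""
    encoded = base64.b64encode(payload).decode("ascii")
    return f"data:{content_type};base64,{encoded}"


def build_request(image: bytes) -> Dict[str, Any]:
    """Assemble the request body that the create-payload step aims for."""
    return {
        "model": "gpt-4o-mini",
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": "What's in this image?"},
                    {
                        "type": "image_url",
                        "image_url": {"url": to_data_url(image, "image/jpeg")},
                    },
                ],
            }
        ],
    }


def extract_text(response: Dict[str, Any]) -> Dict[str, Any]:
    """Mirror parse-payload: move choices[0].message.content to a top-level text."""
    result = copy.deepcopy(response)  # leave the caller's response untouched
    result["text"] = result["choices"][0]["message"].pop("content")
    return result


if __name__ == "__main__":
    body = build_request(b"\xff\xd8\xff")  # placeholder bytes, not a real JPEG
    print(json.dumps(body, indent=2))

    # Made-up response containing only the fields this sketch reads.
    fake_response = {"choices": [{"message": {"role": "assistant", "content": "A cat."}}]}
    print(extract_text(fake_response)["text"])
```

Slack incoming webhooks read the message from a top-level `text` field, which is why the model's reply is moved there before the final step.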

For a full list of inference examples see:

Golioth for AI marks a major step forward in integrating AI with IoT. This powerful collection of features is the culmination of our relentless innovation in device management and data routing, now unlocking advanced AI capabilities like never before. Whether you’re an AI expert or just starting your AI journey, our platform provides the infrastructure to seamlessly train, deploy, and manage AI models with IoT data.

We’ve assembled a set of exciting examples to showcase how these features work together, making it easier than ever to achieve advanced AI integration with IoT. We can’t wait to see the AI innovations you’ll create using Golioth.

For detailed information and to get started, visit our documentation and explore our examples on GitHub.

Thank you for joining us on this exciting journey. Stay tuned for more updates and build on!
